At AWS re:Invent, AWS chief Adam Selipsky subtly called out OpenAI’s security flaws while introducing new security and safety features in Amazon Bedrock.

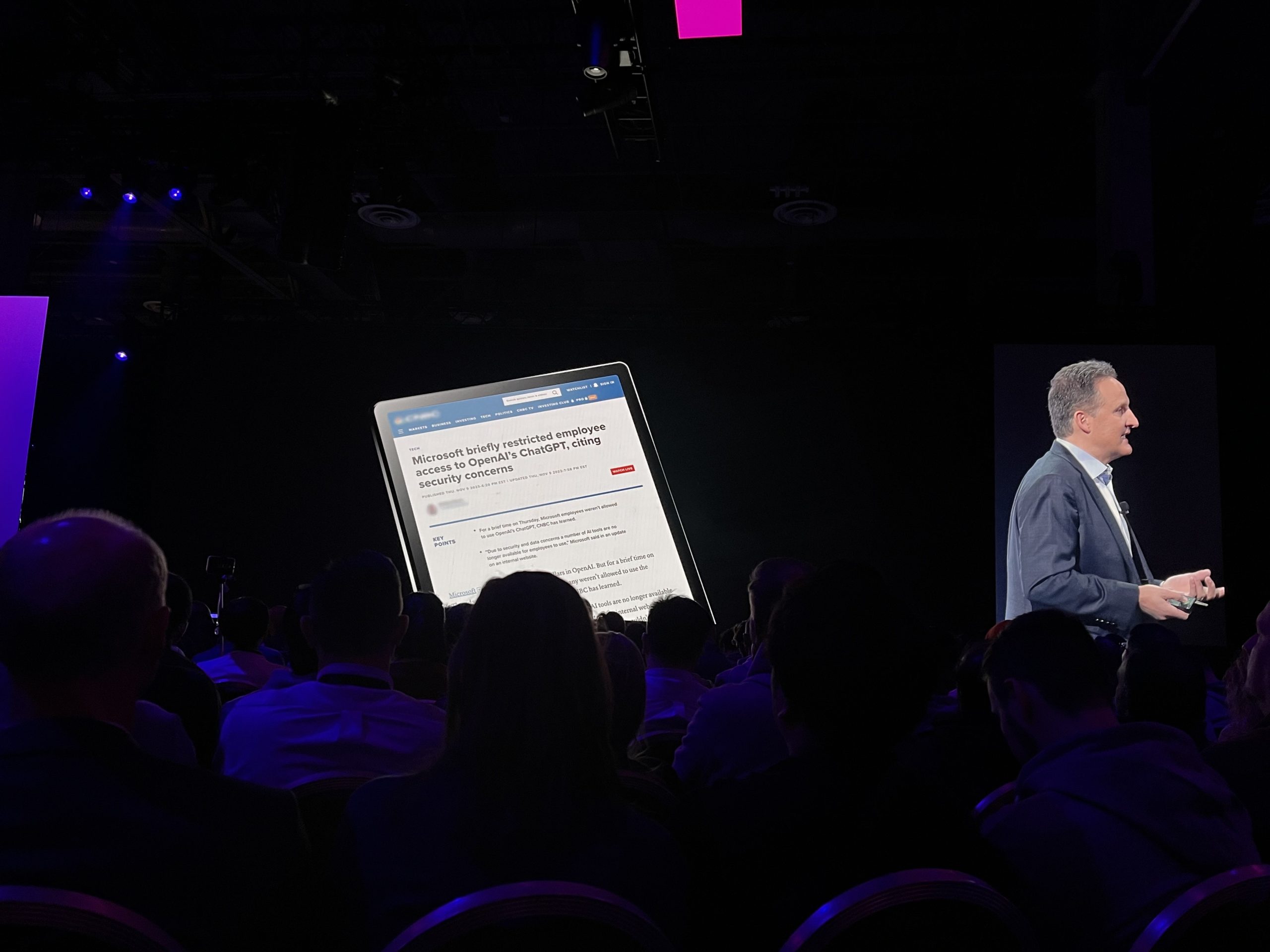

With a slide citing CNBC’s report, ‘Microsoft briefly restricted employee access to OpenAI’s ChatGPT, citing security concerns,’ Selipsky introduced Guardrails for Amazon Bedrock.

He stressed the importance of responsible AI and said AWS has integrated it into its platform from day one. “An important component of responsible AI is promoting the interaction between consumers and the applications to avoid harmful outcomes, and the easiest way to do this is actually placing limits on what information models can and can’t do,” said Selipsky.

“With Guardrails for Amazon Bedrock, you can consistently implement safeguards to deliver relevant and safe user experiences aligned with your company policies and principles,” the company said in its blog post.

Guardrails enable users to set restrictions on topics and apply content filters, eliminating undesirable and harmful content from interactions within applications. This provides an additional layer of control beyond the safeguards inherent in foundation models (FMs).

Guardrails can be applied to all LLMs in Amazon Bedrock, encompassing fine-tuned models and Agents for Amazon Bedrock.
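
To make the idea concrete, here is a minimal Python sketch of a guardrail as a model-agnostic layer that screens both the prompt and the reply. It is an illustration only and not the Amazon Bedrock API: the `Guardrail` class, the `guarded` wrapper and the simple phrase matching are all hypothetical stand-ins for whatever classification a real service performs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Guardrail:
    """Hypothetical policy object (not the Bedrock API)."""
    # Maps a denied topic name to trigger phrases that indicate it.
    denied_topics: Dict[str, List[str]] = field(default_factory=dict)
    # Terms that must never appear in a prompt or a reply.
    blocked_terms: List[str] = field(default_factory=list)
    refusal: str = "Sorry, I can't help with that."

    def violates(self, text: str) -> bool:
        """Return True if the text hits a denied topic or a blocked term."""
        lowered = text.lower()
        topic_hit = any(
            phrase.lower() in lowered
            for phrases in self.denied_topics.values()
            for phrase in phrases
        )
        term_hit = any(term.lower() in lowered for term in self.blocked_terms)
        return topic_hit or term_hit


def guarded(model: Callable[[str], str], rail: Guardrail) -> Callable[[str], str]:
    """Wrap any prompt-to-reply function so the policy applies on both sides."""
    def call(prompt: str) -> str:
        if rail.violates(prompt):      # screen the user's input
            return rail.refusal
        reply = model(prompt)
        if rail.violates(reply):       # screen the model's output
            return rail.refusal
        return reply
    return call
```

Because the wrapper only needs a function from prompt to reply, the same policy object can sit in front of any model, which mirrors the article's point that guardrails add a layer of control on top of whatever safeguards a foundation model already has.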

“OpenAI has a unique perspective on safety, driven by scientific measurement and lessons from iterative deployment”, OpenAI’s former board member Greg Brockman posted on X minutes after AWS’s Guardrails were announced.

OpenAI has consistently emphasised that it refrains from using API data for training its models. In an effort to build trust with enterprises, the AI startup introduced ChatGPT Enterprise earlier this year.

The post AWS Subtly Calls Out OpenAI ChatGPT Security Flaws, Introduces Bedrock Guardrails appeared first on Analytics India Magazine.
