Common controls in place to fight financial fraud—anti-money laundering (AML) measures and know-your-customer (KYC) requirements—may have met their match in AI.

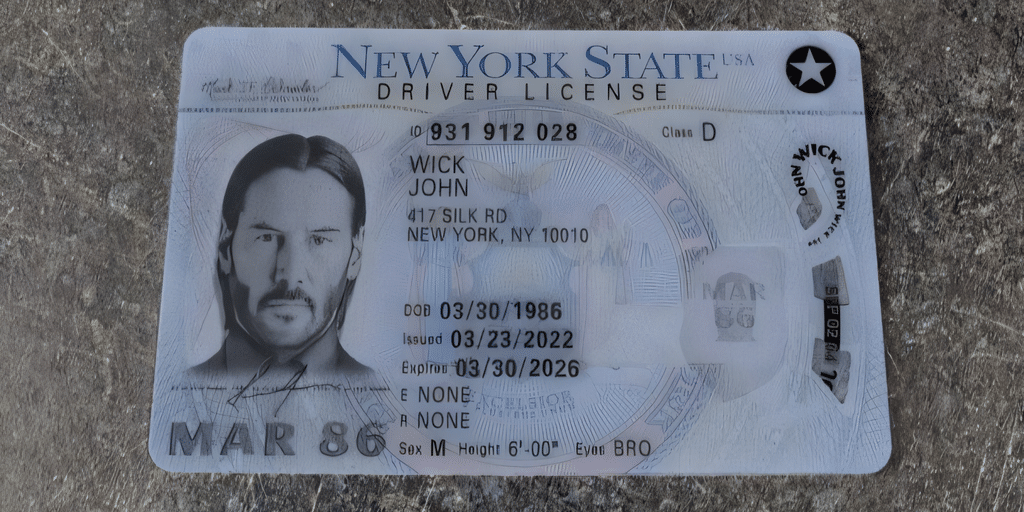

An underground service called OnlyFake is leveraging “neural networks” to craft high-quality fake IDs. According to a 404 Media report, anyone can get a startlingly realistic fake ID generated instantly for just $15, potentially facilitating a range of illicit activities.

The original OnlyFake Telegram account, its primary customer-facing platform, was shut down. But its new account declares the end of the Photoshop era, boasting its capability to mass-produce documents using advanced “generators.”

The site’s owner, using the alias John Wick, said that the service can batch generate hundreds of documents from an Excel dataset.

The images are so good that 404 Media was able to get past the KYC measures of OKX, a cryptocurrency exchange that uses the third-party verification service Jumio to verify its customers’ documents. A cybersecurity researcher told the news outlet that users were sending OnlyFake IDs to open bank accounts and get their crypto accounts unbanned.

How it’s done

The OnlyFake service relies on fairly standard AI techniques, albeit applied with great sophistication.

Using Generative Adversarial Networks (GANs), a form of AI, developers can design one neural network that is optimized to deceive another that is built to detect fakes. With every training iteration, both networks evolve and get better at making and detecting fakes, respectively.
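For readers who want the math, this tug-of-war between the two networks is usually written as a minimax objective. What follows is the textbook formulation of a GAN, not a description of whatever OnlyFake actually runs; the symbols $G$, $D$, $x$, and $z$ are standard notation and do not come from the 404 Media report.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

Here $D(x)$ is the detector's estimated probability that a sample $x$ is real, and $G(z)$ is the image the generator produces from random noise $z$. The detector pushes the expression up by scoring real samples high and generated ones low, while the generator pushes it down by producing samples the detector mistakes for real.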

Another approach is to train a diffusion-based model on a massive, well-curated dataset of real IDs. Trained on enough examples of a specific kind of item, these models synthesize extremely realistic images and learn to replicate the minute details that make fakes nearly indistinguishable from authentic documents, outwitting traditional forgery detection methods.
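As a rough sketch of what "diffusion-based" means, the standard denoising-diffusion setup gradually corrupts a training image $x_0$ with Gaussian noise and trains a network to undo it. The notation below ($\beta_t$, $\bar{\alpha}_t$, $\epsilon_\theta$) follows the common textbook formulation and is an assumption for illustration; nothing in the reporting confirms which model, if any, OnlyFake uses.

$$
x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I), \qquad \bar{\alpha}_t = \prod_{s=1}^{t} (1 - \beta_s)
$$

$$
\mathcal{L} = \mathbb{E}_{x_0,\, \epsilon,\, t}\Big[\big\lVert \epsilon - \epsilon_\theta(x_t, t) \big\rVert^2\Big]
$$

The first line adds noise according to a schedule $\beta_t$; the second trains the network $\epsilon_\theta$ to predict the noise that was added. Generating a new image then amounts to starting from pure noise and removing the predicted noise step by step.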

Should you risk it?

For those looking to avoid having to use their real identities, OnlyFake is likely tempting. But engaging the service brings with it both ethical and legal questions. Beneath the veneer of anonymity and appeal of easy access, these operations stand on very shaky ground.

Because it dispenses fake IDs from many countries—including the U.S., Italy, China, Russia, Argentina, the Czech Republic, and Canada—the openly criminal enterprise has undoubtedly caught the eye of law enforcement agencies around the globe.

Big Brother may already be watching, in other words.

The risks go further than that. John Wick, for example, could be keeping a list of clients, and the exposure of such a list would be catastrophic for OnlyFake and its users. In addition, the new OnlyFake Telegram group has over 600 members, most of whom could be traced through their linked phone numbers.

And while it is obvious, it bears mentioning that paying OnlyFake with traditional digital payment methods is a big no-go.

Even though cryptocurrency payments provide a layer of privacy, they are not completely safe from identity exposure, either. Countless services claim to be able to track crypto transactions, and digital currencies are thus beginning to lose the anonymity associated with them.

And no, OnlyFake doesn’t take the crypto privacy token Monero.

Most importantly, buying a fake ID directly contravenes AML and KYC policies, which are at least ostensibly in place to combat terrorist financing and other criminal activities.

Business may be booming, but are the possible ramifications worth the quick, affordable convenience?

Evolving regulations

Regulators are already trying to tackle this new threat. On January 29, the U.S. Commerce Department proposed a set of rules titled, “Taking Additional Steps To Address the National Emergency With Respect to Significant Malicious Cyber-Enabled Activities.”

The department wants to require infrastructure providers to report foreign persons who try to train large AI models, whatever the reason, though cases where deepfakes could be used in fraud or espionage are clearly the focus.

These measures may still not be enough.

“KYC’s downfall was inevitable with AI now crafting fake IDs that breeze through verifications,” Torsten Stüber, CTO of Satoshi Pay, said on Twitter. “It’s time for a shift: If rigorous regulation is a must, governments need to ditch outdated bureaucracy for cryptographic tech, enabling secure, third-party identity verification.”

Of course, the use of AI in deception stretches beyond fake IDs. Telegram bots now offer services ranging from custom deepfake videos, where individuals’ faces are superimposed onto existing footage, to fabricated nude images of real people, known as deepnudes.

Unlike earlier services, these don’t require much knowledge or even powerful hardware—making the technology widely available without even having to download free image editing tools.

Edited by Ryan Ozawa.
