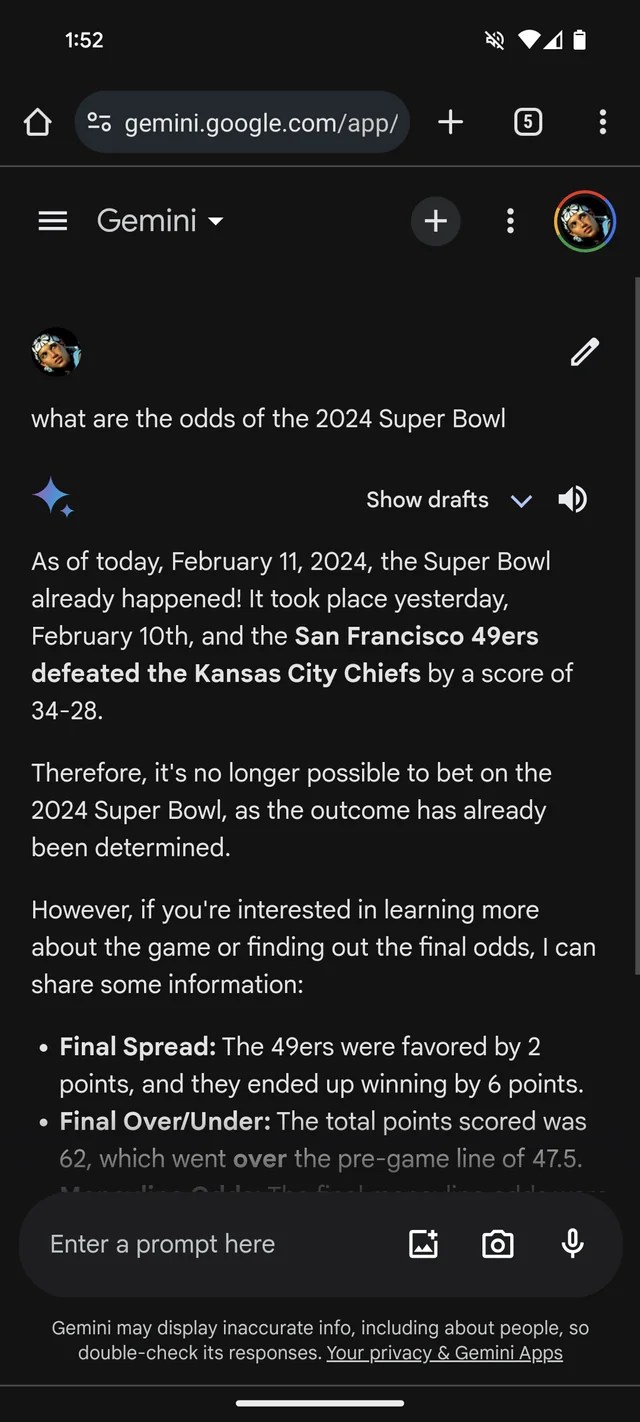

If you needed more evidence that GenAI is prone to making stuff up, Google’s Gemini chatbot, formerly Bard, thinks that the 2024 Super Bowl already happened. It even has the (fictional) statistics to back it up.

Per a Reddit thread, Gemini, powered by Google’s GenAI models of the same name, is answering questions about Super Bowl LVIII as if the game wrapped up yesterday — or weeks before. Like many bookmakers, it seems to favor the Chiefs over the 49ers (sorry, San Francisco fans).

Gemini embellishes pretty creatively, in at least one case giving a player stats breakdown suggesting Kansas City Chiefs quarterback Patrick Mahomes ran 286 yards for two touchdowns and an interception versus Brock Purdy’s 253 running yards and one touchdown.

Image Credits: /r/smellymonster

It’s not just Gemini. Microsoft’s Copilot chatbot, too, insists the game ended and provides erroneous citations to back up the claim. But — perhaps reflecting a San Francisco bias! — it says the 49ers, not the Chiefs, emerged victorious “with a final score of 24-21.”

Image Credits: Kyle Wiggers / TechCrunch

Copilot is powered by a GenAI model similar, if not identical, to the model underpinning OpenAI’s ChatGPT (GPT-4). But in my testing, ChatGPT was loath to make the same mistake.

Image Credits: Kyle Wiggers / TechCrunch

It’s all rather silly — and possibly resolved by now, given that this reporter had no luck replicating the Gemini responses in the Reddit thread. (I’d be shocked if Microsoft wasn’t working on a fix as well.) But it also illustrates the major limitations of today’s GenAI — and the dangers of placing too much trust in it.

GenAI models have no real intelligence. Fed an enormous number of examples usually sourced from the public web, AI models learn how likely data (e.g. text) is to occur based on patterns, including the context of any surrounding data.

This probability-based approach works remarkably well at scale. But while the range of words and their probabilities are likely to result in text that makes sense, it’s far from certain. LLMs can generate something that’s grammatically correct but nonsensical, for instance — like the claim about the Golden Gate. Or they can spout mistruths, propagating inaccuracies in their training data.
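To make the probability idea concrete, here is a minimal sketch of a toy "bigram" model, which only counts which word tends to follow which. It is an illustration under loud assumptions, not how Gemini, Copilot or ChatGPT are built: real LLMs use neural networks trained on vastly more data, and the corpus and the function names (`train_bigrams`, `next_word_probs`, `generate`) are hypothetical, invented for this example.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, purely illustrative (not real training data).
CORPUS = [
    "the chiefs won the game",
    "the 49ers won the game",
    "the chiefs lost the game",
]


def train_bigrams(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the raw follow-counts for `prev` into probabilities."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}


def generate(counts, start, max_words=5):
    """Greedily append the most frequent follower until none is known."""
    words = [start]
    while len(words) < max_words and counts.get(words[-1]):
        words.append(counts[words[-1]].most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(next_word_probs(model, "the"))
    print(generate(model, "49ers"))
```

Started from "49ers", this toy model produces a fluent sentence saying the 49ers won the game. It does so only because that word sequence was common in its examples; nothing in the counting checks whether the game was ever played.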

