The European Parliament voted Wednesday to adopt the AI Act, securing the bloc pole position in setting rules for a broad sweep of artificial intelligence-powered software, or what regional lawmakers have dubbed “the world’s first comprehensive AI law”.

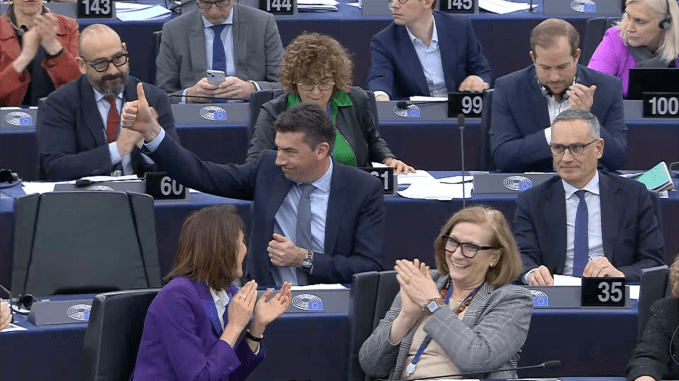

MEPs overwhelmingly backed the provisional agreement reached with the Council in December's trilogue talks, with 523 votes in favor versus just 46 against (and 49 abstentions).

The landmark legislation sets out a risk-based framework for AI, applying different rules and requirements depending on the level of risk attached to each use case.

Today's full plenary vote follows affirmative committee votes and last month's endorsement of the provisional agreement by the ambassadors of all 27 EU Member States. The outcome means the AI Act is well on its way to becoming law across the region, with only final approval from the Council still pending.

Disclaimer

We strive to uphold the highest ethical standards in all of our reporting and coverage. We at StartupNews.fyi want to be transparent with our readers about any potential conflicts of interest that may arise in our work. It’s possible that some of the investors we feature may have connections to other businesses, including competitors or companies we write about. However, we want to assure our readers that this will not have any impact on the integrity or impartiality of our reporting. We are committed to delivering accurate, unbiased news and information to our audience, and we will continue to uphold our ethics and principles in all of our work. Thank you for your trust and support.
