If you haven’t yet watched yesterday’s OpenAI event, I highly recommend doing so. The headline news was that the latest GPT-4o model works seamlessly with any combination of text, audio, and video.

That includes the ability to ‘show’ the GPT-4o app a screen recording you are taking of another app – and it’s this capability the company showed off with a pretty insane iPad AI tutor demo …

GPT-4o

OpenAI said that the ‘o’ stands for ‘omni.’

> GPT-4o (“o” for “omni”) is a step towards much more natural human-computer interaction—it accepts as input any combination of text, audio, and image and generates any combination of text, audio, and image outputs.
>
> It can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation […] GPT-4o is especially better at vision and audio understanding compared to existing models.

Even the voice aspect of this is a big deal. Previously, ChatGPT could accept voice input, but it converted the speech to text before working with it. GPT-4o, in contrast, understands speech directly, so it skips the conversion stage completely.

As we noted yesterday, free users also get a lot of features previously limited to paying subscribers.

AI iPad tutor demo

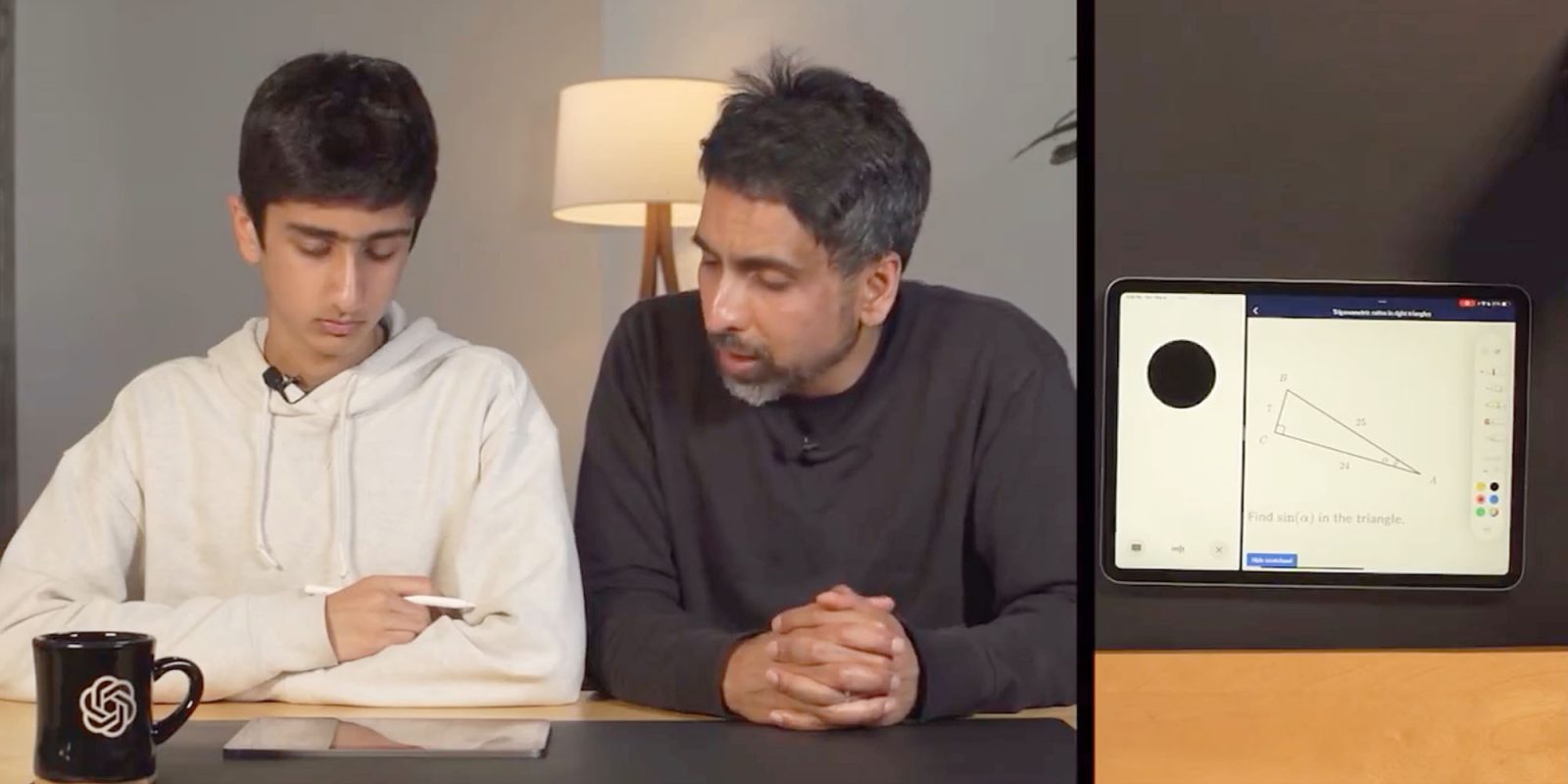

One of the capabilities OpenAI demonstrated was the ability of GPT-4o to watch what you’re doing on your iPad screen (in split-screen mode).

The example shows the AI tutoring a student through a math problem. You can hear that, initially, GPT-4o understood the problem and wanted to solve it immediately. But the new model can be interrupted, and in this case it was asked to help the student solve it himself.
