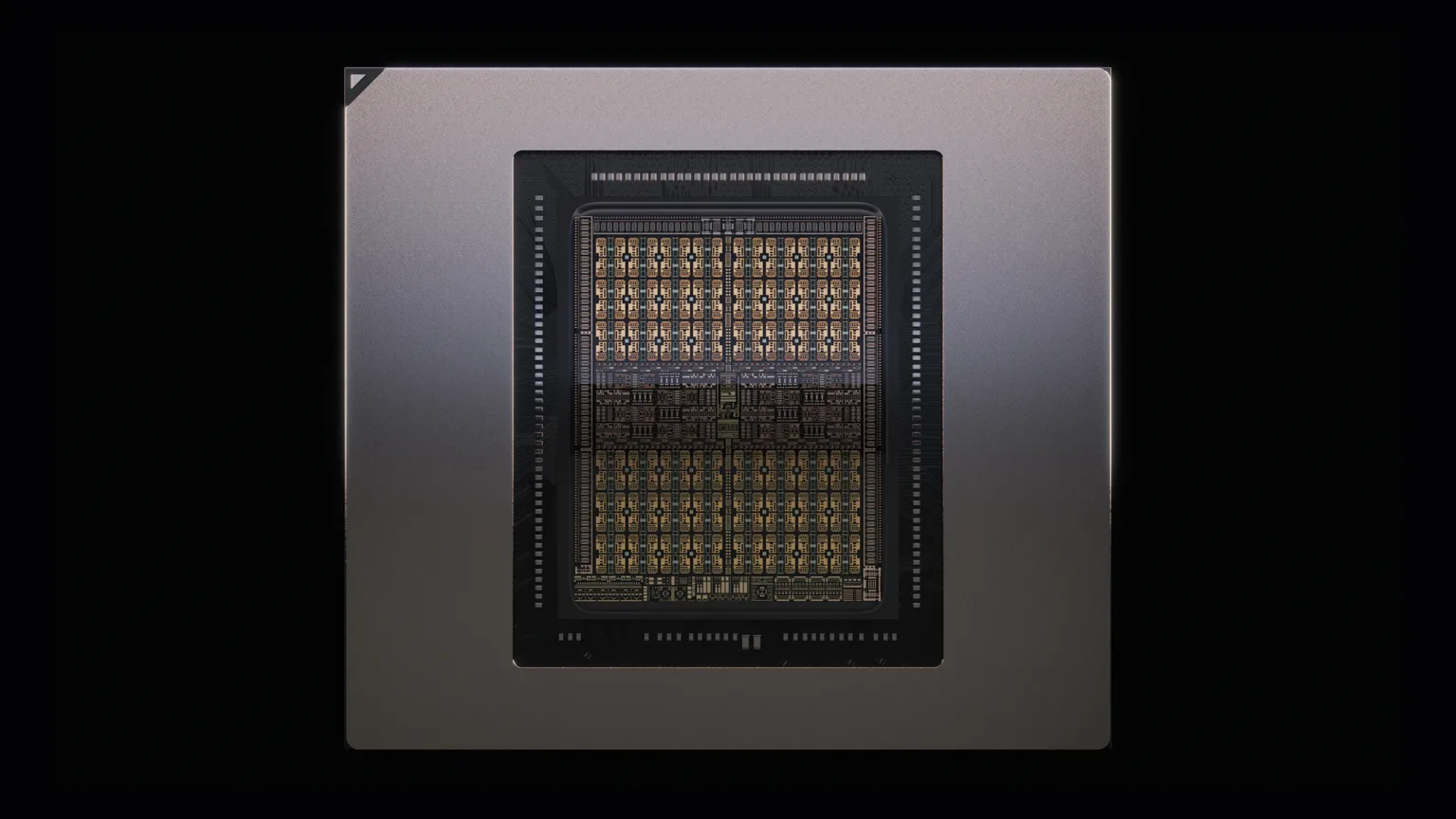

Nvidia unveiled the [Rubin CPX GPU](https://www.tomshardware.com/tech-industry/semiconductors/nvidia-rubin-cpx-forms-one-half-of-new-disaggregated-ai-inference-architecture-approach-splits-work-between-compute-and-bandwidth-optimized-chips-for-best-performance) earlier this week at the AI Technology Conference as part of its next-generation data center portfolio, which is designed to accelerate inference workloads. Rubin CPX, in particular, is optimized for compute rather than bandwidth, forming the other half of a "disaggregated" AI inference architecture that will debut with Vera Rubin next year. Now, however, a closer look at its silicon suggests there might be more to this AI accelerator than Nvidia has let on, with some speculating…