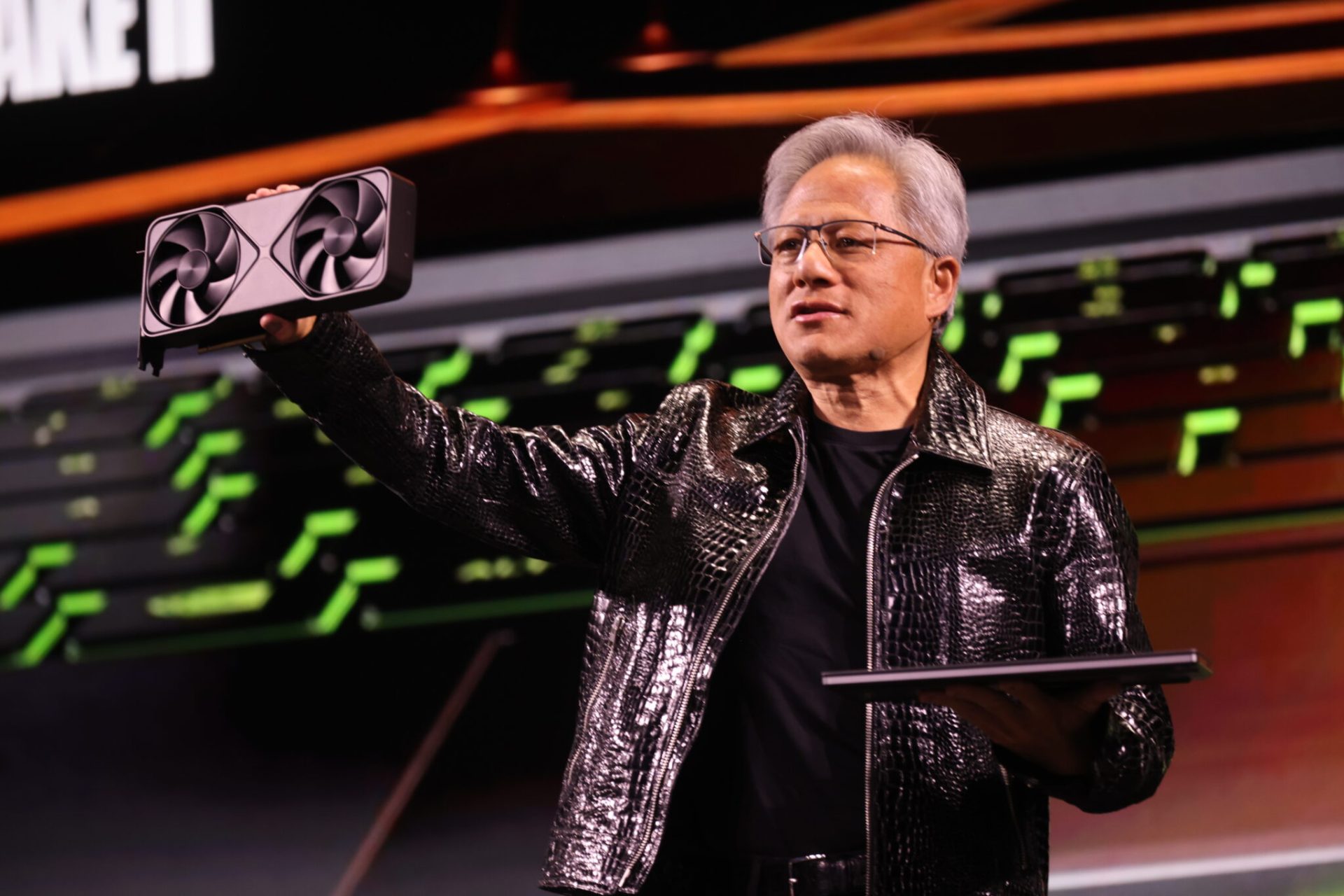

Speaking at the Consumer Electronics Show in Las Vegas, Jensen Huang, CEO of Nvidia, said the company’s next generation of AI chips is now in full production, marking a major milestone in its push to stay ahead in the increasingly competitive AI hardware market. According to Huang, the new platform is capable of delivering up to five times the artificial intelligence computing performance of Nvidia’s previous generation when powering chatbots and other AI-driven applications.

The announcement comes as Nvidia faces mounting pressure not only from traditional rivals, but also from its own customers, many of whom are building custom silicon to reduce dependence on Nvidia’s dominant AI stack.

Inside the Vera Rubin Platform

At the centre of Nvidia’s next phase is the Vera Rubin platform, a modular system built from six separate Nvidia chips. The flagship server configuration will debut with 72 graphics processing units (GPUs) and 36 central processing units (CPUs), representing one of the densest AI compute designs Nvidia has ever produced.

Huang demonstrated how these servers can be linked into large-scale “pods” containing more than 1,000 Rubin chips, built to serve massive AI workloads. According to Nvidia, these pods can make the generation of AI “tokens”, the fundamental units of output in large language models, up to 10 times more efficient, a key metric for real-world chatbot performance.

Nvidia executives said the Rubin systems are already being tested in company labs by AI firms, ahead of broader availability later this year.

Performance Gains Through a Proprietary Data Format

One of the more controversial aspects of the Rubin platform is how it achieves its performance leap. Huang explained that the new chips rely on a proprietary data format that lets Nvidia extract far more performance from a relatively modest increase in transistor count.

“This is how we were able to deliver such a gigantic step up in performance, even though we only have 1.6 times the number of transistors,” Huang said. Nvidia hopes the broader industry will eventually adopt this data format, though its proprietary nature could further entrench Nvidia’s ecosystem advantage.

Rising Competition in AI Inference

While Nvidia continues to dominate AI model training, the company faces sharper competition in AI inference, the process of delivering model outputs to users at scale. Rivals such as Advanced Micro Devices (AMD) are pushing aggressively into the data centre market, while customers like Alphabet are designing their own chips to cut costs and gain more control over performance.

Much of Huang’s keynote focused on how the Rubin platform addresses inference workloads, particularly long, multi-turn conversations in chatbots. Nvidia introduced a new storage layer called context memory storage, designed to keep conversational history closer to the compute layer, enabling faster and more responsive AI interactions.

Networking as a Competitive Battleground

Beyond compute, Nvidia also unveiled a new generation of networking switches built around co-packaged optics, a technology critical for linking thousands of machines into a single, unified AI system. The move places Nvidia in direct competition with networking heavyweights such as Broadcom and Cisco Systems.

Nvidia argues that tighter integration between compute and networking is essential for scaling AI efficiently, and that controlling both layers gives it an edge in building full-stack AI infrastructure.

Early Adopters and Cloud Partnerships

Nvidia said CoreWeave will be among the first to deploy Vera Rubin systems. The company also expects major cloud providers, including Microsoft, Oracle, Amazon and Alphabet, to adopt the platform as they expand their AI infrastructure.

These partnerships are critical to Nvidia’s strategy, as hyperscalers remain the largest buyers of its most advanced chips.

Software, Autonomy, and Open Data

Beyond hardware, Huang highlighted new software aimed at autonomous driving, including a system called Alpamayo that helps self-driving cars explain and log their decision-making processes. Nvidia plans to release the software more broadly, along with the data used to train it.

“Not only do we open-source the models, we also open-source the data that we use to train those models, because only in that way can you truly trust how the models came to be,” Huang said.

Strategic Moves and Geopolitical Pressures

Last month, Nvidia quietly acquired talent and chip technology from startup Groq, including executives who previously helped Google design its AI chips. While Huang said the deal would not affect Nvidia’s core business, it could lead to new products that expand its portfolio.

At the same time, Nvidia is navigating geopolitical complexity. Older chips such as the H200, now cleared in principle for export to China, remain in high demand there, but Nvidia still needs approval from the US and other governments before it can ship them. Nvidia executives confirmed that licensing applications are pending.

As Nvidia rolls out Vera Rubin, the message from CES was clear: the company is betting that tighter integration across compute, networking, and software will keep it ahead in an AI market that is becoming more competitive, more geopolitical, and far more strategic by the year.
