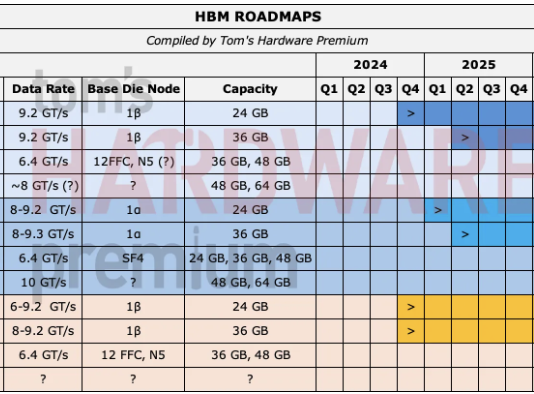



DeepSeek has released a technical paper detailing a method by which AI models could rely on a queryable database of information committed to system memory. Named “Engram”, the conditional memory-based technique achieves demonstrably higher performance on long-context queries by committing sequences of data to static memory. This reduces the models' reliance on reasoning, leaving the GPUs to handle only more complex tasks,…
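To make the general idea concrete, here is a minimal Python sketch of a conditional lookup: check a static table of stored sequences first, and fall back to an expensive computation only on a miss. This is not DeepSeek's implementation. The class `StaticSequenceMemory`, the function `answer`, the fixed sequence length `n`, and the `compute` callback are all hypothetical names chosen for illustration, and the paper's actual mechanism may differ substantially.

```python
from typing import Callable, Dict, Optional, Sequence, Tuple


class StaticSequenceMemory:
    """Hypothetical table keyed by fixed-length token sequences (illustration only)."""

    def __init__(self, n: int) -> None:
        self.n = n  # length of each stored sequence (an assumption of this sketch)
        self.table: Dict[Tuple[str, ...], str] = {}

    def commit(self, tokens: Sequence[str], value: str) -> None:
        """Store a value under an exact sequence of n tokens."""
        if len(tokens) != self.n:
            raise ValueError(f"expected {self.n} tokens, got {len(tokens)}")
        self.table[tuple(tokens)] = value

    def lookup(self, context: Sequence[str]) -> Optional[str]:
        """Return the value stored for the last n tokens of context, if any."""
        if len(context) < self.n:
            return None
        return self.table.get(tuple(context[-self.n:]))


def answer(
    context: Sequence[str],
    memory: StaticSequenceMemory,
    compute: Callable[[Sequence[str]], str],
) -> Tuple[str, str]:
    """Try the cheap memory lookup first; call the costly path only on a miss."""
    hit = memory.lookup(context)
    if hit is not None:
        return hit, "memory"
    return compute(context), "compute"


memory = StaticSequenceMemory(n=2)
memory.commit(["Alexander", "the"], "Great")

# `expensive_model` stands in for the GPU-bound path; it is a placeholder.
expensive_model = lambda ctx: "<computed>"

hit_result = answer(["who", "was", "Alexander", "the"], memory, expensive_model)
miss_result = answer(["an", "unseen", "phrase"], memory, expensive_model)
```

In this sketch, a context ending in a stored sequence is served from the table, while anything else is routed to the placeholder compute function, which mirrors the split between static memory and GPU work described above.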
