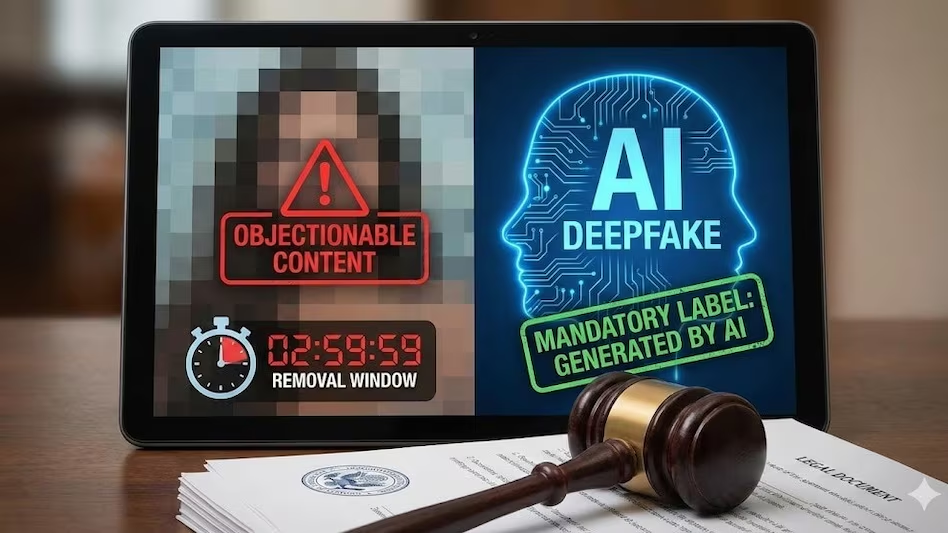

India has directed social media platforms to remove deepfakes more quickly, reinforcing enforcement of existing IT rules amid rising synthetic media risks.

Synthetic media is moving faster than regulation—and governments are trying to catch up.

India has ordered social media platforms to accelerate the removal of deepfake content, citing growing concerns about misinformation, harassment, and election-related manipulation. The directive reinforces existing obligations under India’s IT rules, which require platforms to act promptly against unlawful content.

The renewed push reflects mounting political and public pressure as generative AI tools become widely accessible.

Enforcement, not new legislation

Rather than introducing new laws, authorities are emphasizing stricter enforcement of current intermediary liability rules.

Platforms are expected to respond quickly once content is flagged and to ensure that manipulated media violating policy or law is removed without delay.

Officials have previously warned that non-compliance could attract penalties.

Deepfakes as a governance challenge

Deepfakes present unique regulatory challenges: they can be created cheaply, spread rapidly, and often blur the line between parody and deception.

In India, viral manipulated videos have sparked public outrage and political controversy, raising concerns about their potential impact during elections and periods of social unrest.

The government’s latest order signals that platforms will be held accountable for response times, not just content standards.

Global pattern of tightening rules

India’s directive aligns with global efforts to address synthetic media risks. Governments in Europe and the United States are exploring labeling mandates, transparency requirements, and penalties for malicious use.

However, enforcement capacity often lags technological innovation.

Platforms face pressure to deploy more advanced detection systems while balancing free expression.

The compliance burden grows

For social media companies operating in India—a market with hundreds of millions of users—the directive increases operational complexity.

Faster takedowns require automated detection tools, expanded moderation teams, and clearer escalation pathways.

These measures carry financial costs, and they create reputational risks if applied inconsistently.

The broader AI policy debate

India has positioned itself as a supporter of AI innovation while simultaneously tightening digital governance.

The deepfake directive illustrates that dual approach: encourage technological growth, but intervene where harms escalate.

As generative AI tools become mainstream, regulators are moving from reactive statements to operational demands.

The next phase will test whether platforms can scale moderation at the speed of synthetic media creation.
