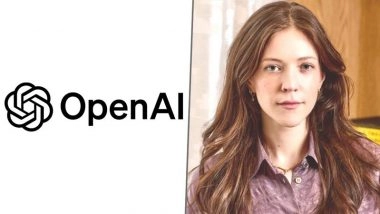

An OpenAI policy executive who reportedly opposed the rollout of an “adult mode” chatbot feature has been dismissed following a sexual discrimination claim, according to media reports.

Leadership tensions inside major AI companies are increasingly spilling into public view.

A senior policy executive at OpenAI has been fired following a sexual discrimination claim, according to reporting by The Wall Street Journal. The executive had reportedly opposed the development of an “adult mode” for the company’s chatbot products.

The dismissal comes at a time when AI firms are navigating internal debates over content boundaries, governance structures, and commercial pressures tied to rapid product expansion.

A governance flashpoint

The controversy centers on OpenAI’s reported consideration of an adult-oriented chatbot mode, a feature that could relax content restrictions under controlled settings.

The executive had allegedly raised objections to the proposal, arguing against expanding into sexually explicit domains. The reported termination followed a claim of sexual discrimination, though full details of the internal proceedings have not been publicly disclosed.

OpenAI has acknowledged personnel changes but has not released a detailed public statement explaining the reasoning behind the dismissal.

Balancing safety and growth

AI companies are increasingly confronted with difficult trade-offs between product experimentation and safety frameworks.

Chatbot platforms have historically enforced strict content policies, particularly around explicit material. However, user demand, competitive pressures, and monetization strategies are prompting some firms to revisit content boundaries.

Internal disagreement over these decisions reflects a broader tension in the AI industry: whether commercial expansion risks diluting safety commitments.

For OpenAI, which has positioned itself as a leader in responsible AI development, governance disputes carry reputational implications beyond routine executive turnover.

Workplace and legal considerations

Sexual discrimination claims in technology firms often trigger internal investigations and legal scrutiny. Without additional public documentation, it remains unclear whether the claim was tied directly to policy disagreements, workplace conduct, or broader organizational dynamics.

The episode highlights the growing complexity of managing AI companies at scale, where product direction intersects with cultural and ethical debates.

Industry-wide implications

The AI sector is evolving rapidly, with product teams, policy leaders, and executives frequently navigating uncharted regulatory and social terrain.

As AI systems become more integrated into daily life, debates over content moderation, permissible use cases, and user protections are intensifying.

For policymakers and investors, governance stability is increasingly viewed as a core risk factor in AI companies.

Leadership transitions linked to safety or policy disagreements may signal internal recalibration — or deeper structural tensions — depending on how companies respond publicly and operationally.

In the near term, attention will likely focus on whether OpenAI adjusts its content strategy or governance framework following the executive’s departure.

As generative AI expands into new domains, the industry’s internal debates are becoming as consequential as its technological breakthroughs.
