Samsung Electronics shares climbed to a record high after reports suggested progress in developing HBM4, the next generation of high-bandwidth memory designed for AI workloads. The development highlights how memory innovation has become central to the AI infrastructure race.

High-bandwidth memory is critical for feeding large AI models with data at scale.

Why HBM4 matters

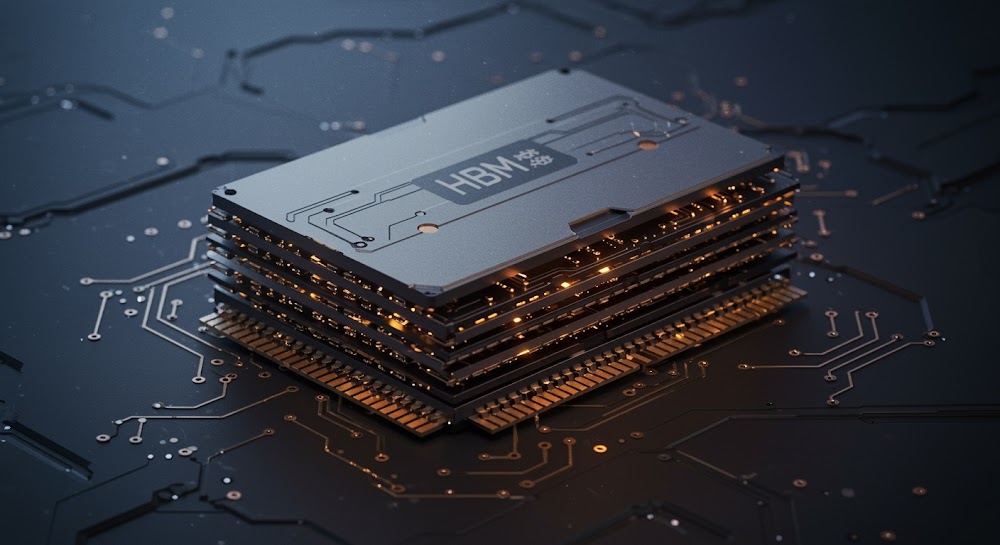

High-bandwidth memory (HBM) enables faster data transfer between memory and processors, particularly GPUs used for AI training and inference.

HBM4 is expected to offer:

- Greater data throughput

- Improved energy efficiency

- Higher stack density

- Reduced latency

These improvements directly influence AI system performance, especially for large language models and generative AI applications.
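As a rough illustration of what "greater data throughput" means, the peak bandwidth of a single memory stack is approximately its interface width multiplied by its per-pin data rate. The sketch below applies that formula; the function name and the two example configurations are illustrative assumptions, not Samsung specifications or figures from the reports.

```python
def peak_bandwidth_gb_per_s(interface_width_bits: int, pin_rate_gbit_per_s: float) -> float:
    """Approximate peak bandwidth of one memory stack, in GB/s.

    bandwidth = interface width (bits) * per-pin rate (Gbit/s) / 8 bits per byte
    """
    return interface_width_bits * pin_rate_gbit_per_s / 8


# Hypothetical configurations, chosen only to show how the formula scales.
narrower_stack = peak_bandwidth_gb_per_s(interface_width_bits=1024, pin_rate_gbit_per_s=6.4)
wider_stack = peak_bandwidth_gb_per_s(interface_width_bits=2048, pin_rate_gbit_per_s=8.0)

print(f"narrower stack: {narrower_stack:.1f} GB/s")
print(f"wider stack:    {wider_stack:.1f} GB/s")
print(f"ratio:          {wider_stack / narrower_stack:.2f}x")
```

Under these assumed inputs, doubling the interface width contributes more to the gain than the higher pin rate, which is why a wider interface is a common lever for raising throughput.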

AI infrastructure demand surge

Global AI adoption has driven intense demand for:

- GPUs

- Advanced packaging technologies

- High-performance memory modules

Memory suppliers have emerged as pivotal players in the AI hardware supply chain.

Record share prices reflect investor belief that HBM4 could strengthen Samsung’s competitive positioning.

Competitive semiconductor landscape

The AI memory segment is highly competitive.

Manufacturers are racing to secure contracts with:

- Hyperscale cloud providers

- AI research labs

- Advanced chip designers

HBM4 development represents both a technical milestone and a strategic market signal.

Faster commercialization timelines could translate into supply advantages.

Financial market implications

Technology stocks tied to AI hardware have seen strong performance amid rising capital expenditure by cloud providers.

A record high for Samsung suggests:

- Investor confidence in execution

- Expectations of sustained AI chip demand

- Belief in margin expansion from premium memory products

Semiconductor cycles remain volatile, but AI-driven demand has created structural tailwinds.

Broader industry impact

Memory bandwidth increasingly constrains AI system scalability.

Improved HBM technology can:

- Reduce bottlenecks in model training

- Enhance inference speed

- Lower energy consumption per computation

Such advances influence the economics of AI deployment.
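One common way to reason about this bottleneck is the roofline model: attainable throughput is the lower of peak compute and memory bandwidth multiplied by arithmetic intensity (operations performed per byte moved). The sketch below is a minimal version of that model; the accelerator figures are hypothetical placeholders, not measurements of any real product.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_per_s: float, flops_per_byte: float) -> float:
    """Roofline estimate of attainable throughput in TFLOP/s.

    TB/s * FLOP/byte = TFLOP/s, so the memory-side limit is directly
    comparable with the compute peak.
    """
    memory_limit = bandwidth_tb_per_s * flops_per_byte
    return min(peak_tflops, memory_limit)


# Hypothetical accelerator: 1000 TFLOP/s peak compute, 3 TB/s memory bandwidth.
# A workload with low arithmetic intensity (2 FLOP/byte) is memory-bound.
baseline = attainable_tflops(peak_tflops=1000.0, bandwidth_tb_per_s=3.0, flops_per_byte=2.0)

# Same compute, twice the memory bandwidth (assumed, for illustration).
faster_memory = attainable_tflops(peak_tflops=1000.0, bandwidth_tb_per_s=6.0, flops_per_byte=2.0)

print(f"baseline:      {baseline:.1f} TFLOP/s")
print(f"faster memory: {faster_memory:.1f} TFLOP/s")
```

In this assumed memory-bound case, doubling bandwidth doubles attainable throughput while the compute peak stays unused, which is the sense in which faster memory can matter as much as a faster processor.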

Long-term outlook

HBM4 remains under development, and the timing of its commercial ramp-up will determine when and how much it contributes to revenue.

However, capital markets appear to be pricing in sustained AI infrastructure growth.

As AI models grow larger, memory performance becomes as crucial as processing power.

Samsung’s share surge underscores a broader shift: in the AI era, memory is strategic, and the race to supply next-generation bandwidth is reshaping semiconductor leadership.
