Summary

A reported agreement between Nvidia and Groq underscores the accelerating demand for specialized AI computing. The move reflects how chipmakers and startups are aligning to meet enterprise-scale inference and data center needs.

Introduction

The artificial intelligence hardware market is evolving beyond training-focused systems. As real-world AI applications scale, inference workloads (running trained models to serve live requests) are becoming just as critical as training. The reported Nvidia–Groq deal illustrates how established chip leaders and emerging startups are collaborating to address this shift.

What the Nvidia–Groq Deal Signals

Focus on AI Inference

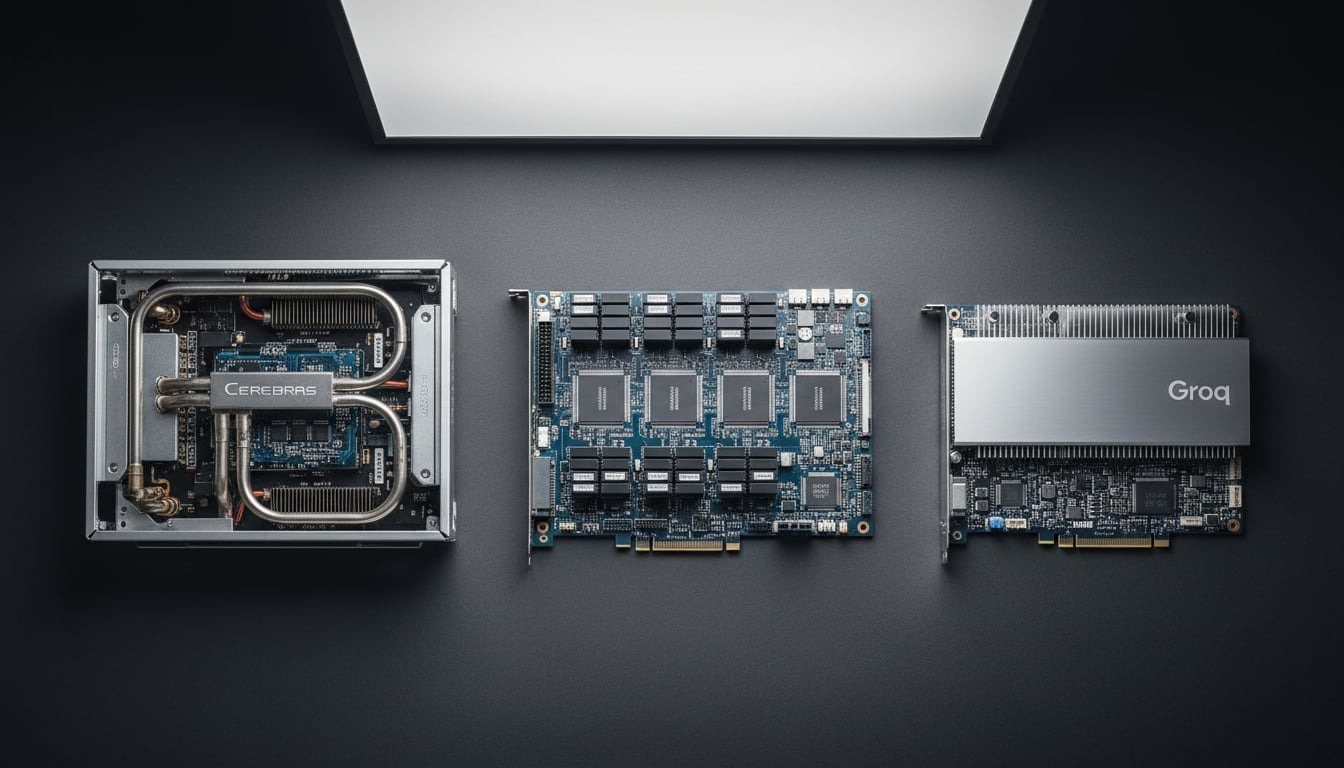

- Groq is known for its inference-optimized hardware architecture.

- Its chips are designed for low-latency, predictable performance in production AI environments.

- The deal highlights growing enterprise demand for faster and more efficient inference solutions.

Nvidia’s Strategic Positioning

- Nvidia continues to dominate AI training workloads.

- Partnering with inference-focused players strengthens its broader AI ecosystem.

- The strategy supports end-to-end AI deployment, from model training to real-time usage.

Impact on the AI Hardware Market

Enterprise Adoption Trends

- Companies are diversifying chip suppliers to reduce supply bottlenecks.

- Inference performance, power efficiency, and cost predictability are becoming key buying factors.

- Data centers are prioritizing hardware that supports scalable AI services.

Competitive Dynamics

- Nvidia remains the market leader in AI accelerators.

- Startups like Groq are gaining attention by solving specific performance challenges.

- The collaboration highlights a more modular and partnership-driven AI hardware ecosystem.

Broader Industry Implications

Data Center Infrastructure

- AI workloads are reshaping data center design and power planning.

- Inference-heavy applications such as chatbots, search, and real-time analytics are driving hardware demand.

Innovation Acceleration

- Partnerships between large incumbents and startups can speed up deployment of new technologies.

- Enterprises benefit from faster access to optimized AI solutions.

Conclusion

The reported Nvidia–Groq deal reflects a broader shift in the AI computing landscape. As inference workloads grow alongside training, collaboration between established chipmakers and specialized startups is becoming essential. This trend is likely to shape how AI infrastructure is built and deployed across data centers in the coming years.
