Artificial intelligence is entering classrooms at a speed few educators expected. From AI-powered tutoring tools to automated grading systems, schools across the world are experimenting with technologies that promise efficiency, personalization, and cost savings. But a new report suggests the risks of bringing AI into schools may be greater than many policymakers and education leaders are willing to admit.

The report, highlighted by NPR, argues that the rapid adoption of AI in education is happening without sufficient evidence that it improves learning outcomes. Instead, researchers warn that AI could weaken critical thinking, deepen inequality, and shift control of education toward private technology companies with limited accountability.

As school systems face budget pressures and staffing shortages, the appeal of AI is understandable. However, the report urges caution, warning that the long-term consequences for students, teachers, and society may be profound.

Why AI Is Spreading So Quickly in Schools

AI tools are being marketed to schools as solutions to some of education’s most persistent problems. Vendors promise personalized learning experiences, faster feedback, reduced teacher workloads, and data-driven insights into student performance.

Many school districts are under intense pressure to improve test scores, manage large class sizes, and address learning gaps worsened by the pandemic. In this context, AI is often presented as a neutral, scalable fix.

However, the report emphasizes that speed has outpaced understanding. Schools are adopting AI tools faster than researchers can study their impact, and faster than educators can develop safeguards.

The Core Concern: Learning Quality

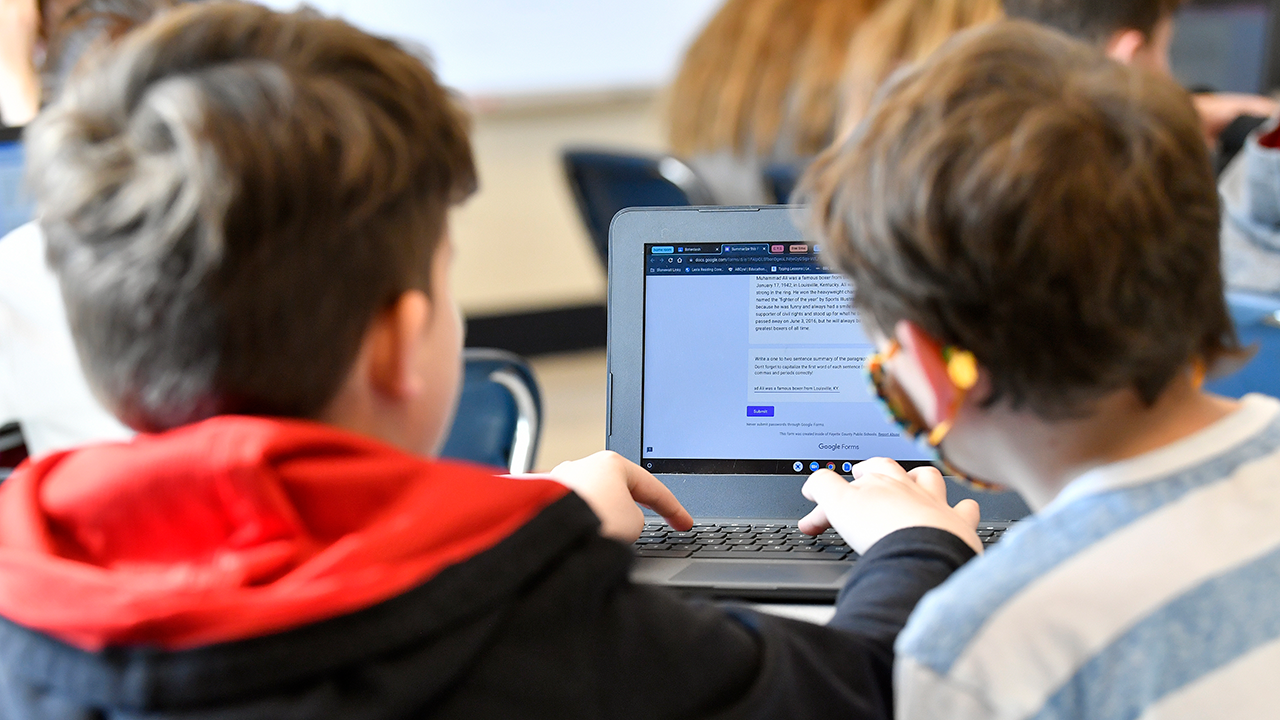

One of the central arguments in the report is that AI risks changing how students learn in ways that may be harmful. Tools that generate answers, summarize texts, or solve problems can reduce the need for students to struggle through complex material.

Educational research consistently shows that learning happens through effort, mistakes, and revision. When AI shortcuts that process, students may complete assignments without developing deep understanding.

Teachers interviewed for the report expressed concern that AI encourages surface-level engagement. Students may appear productive, but their ability to think critically, write independently, or solve problems unaided could decline over time.

Dependence on AI and Skill Erosion

Another risk highlighted is overreliance. When students regularly use AI to assist with writing, math, or research, they may lose confidence in their own abilities.

The report warns that this dependency could erode foundational skills, particularly in younger students whose cognitive development is still underway. Writing, reading comprehension, and reasoning are not just academic skills; they shape how people think.

If AI becomes a default crutch, students may struggle later in environments where such tools are unavailable or inappropriate, including exams, workplaces, or higher education settings with strict integrity standards.

Inequality and the Digital Divide

While AI is often promoted as a tool for equity, the report argues it may actually widen existing gaps. Well-funded schools can afford high-quality AI tools, training, and oversight. Under-resourced schools may rely on cheaper or poorly tested systems.

Students with access to better AI tools may gain advantages in speed and polish, while others fall behind. At the same time, families with more resources can supplement AI use with human support, such as tutoring, mitigating potential downsides.

The report stresses that technology does not operate in a vacuum. Without strong policy and investment, AI can amplify inequality rather than reduce it.

Data Privacy and Student Surveillance

AI systems in schools rely on data. That data often includes sensitive information about students’ behavior, performance, and even emotional states.

The report raises concerns about how this data is collected, stored, and used. Many AI education tools are developed by private companies whose business models depend on data extraction.

Parents and educators may have limited visibility into how student data is handled or whether it could be repurposed for commercial use. In some cases, students may be effectively surveilled throughout their school day without meaningful consent.

Accountability and Transparency

Unlike traditional teaching methods, AI systems are often opaque. Even developers may not fully understand how certain algorithms reach their conclusions.

This lack of transparency poses challenges for schools. If an AI tool wrongly labels a student as underperforming or at risk, or if its output is biased, that label can influence how teachers and administrators treat the student.

The report warns that outsourcing judgment to algorithms can weaken professional accountability. When decisions are made by systems rather than people, it becomes harder to challenge errors or biases.

Impact on Teachers and the Profession

AI is frequently framed as a way to support teachers, but the report cautions that it could also undermine the profession. Automated grading and lesson planning tools may reduce teachers’ autonomy and professional judgment.

There is also concern that AI could be used as a justification for larger class sizes or reduced staffing. While AI may assist with certain tasks, it cannot replace the relational and emotional aspects of teaching.

Teachers interviewed for the report emphasized that education is not just about delivering content. It is about mentoring, motivation, and responding to human complexity, areas where AI remains limited.

Commercial Influence in Education

Another risk highlighted is the growing influence of technology companies in shaping education policy. As schools adopt AI tools, vendors gain leverage over curricula, assessment methods, and classroom practices.

The report warns that profit-driven incentives may not align with educational goals. Companies are rewarded for scale and engagement, not necessarily for long-term learning outcomes.

This raises questions about who controls the future of education and whose interests are prioritized when AI becomes embedded in classrooms.

The Evidence Gap

Perhaps the most striking finding of the report is how little evidence exists to support many claims about AI’s benefits in education. While pilot studies and anecdotal success stories are common, rigorous, long-term research is scarce.

Education outcomes are complex and influenced by many factors. Isolating the impact of AI is difficult, yet policies are being shaped by optimism rather than proof.

The report calls for more cautious experimentation, with clear evaluation frameworks and the ability to withdraw tools that do not deliver measurable benefits.

Global Relevance

The concerns raised about AI in schools are relevant across the USA, UK, UAE, Germany, Australia, and France. Education systems in all these regions are exploring AI to address teacher shortages, rising costs, and learning gaps. At the same time, debates around student privacy, digital equity, and the role of private technology companies are intensifying worldwide. As AI adoption accelerates globally, the risks identified in the report reflect shared challenges faced by education systems across borders.

What Policymakers and Schools Are Being Urged to Do

Rather than banning AI outright, the report advocates for restraint and stronger governance. Schools are encouraged to prioritize human-centered education, treat AI as a supplementary tool, and establish clear limits on its use.

Training for teachers, transparency for parents, and protections for student data are emphasized as essential prerequisites. Without these safeguards, the report warns that AI could reshape education in ways that are difficult to reverse.

A Moment of Decision for Education

The rapid spread of AI in schools reflects broader technological momentum. Once tools become normalized, rolling them back becomes politically and practically difficult.

The report argues that this moment represents a critical decision point. Schools can choose to integrate AI slowly and thoughtfully, or they can allow market forces to drive adoption with limited oversight.

The path chosen will shape not just how students learn, but how society defines education in an age of intelligent machines.

Closing Perspective

AI has undeniable potential, but the report makes clear that potential alone is not enough. Education is a foundational institution, and changes to how students learn have long-lasting consequences.

By highlighting the risks alongside the promised benefits, the report challenges the assumption that technological progress is always positive. It calls for humility, evidence, and a renewed focus on human learning in an increasingly automated world.

In simple terms, a new report warns that while AI tools are spreading quickly in schools, they may undermine learning, worsen inequality, and create long-term risks that outweigh their short-term benefits.
