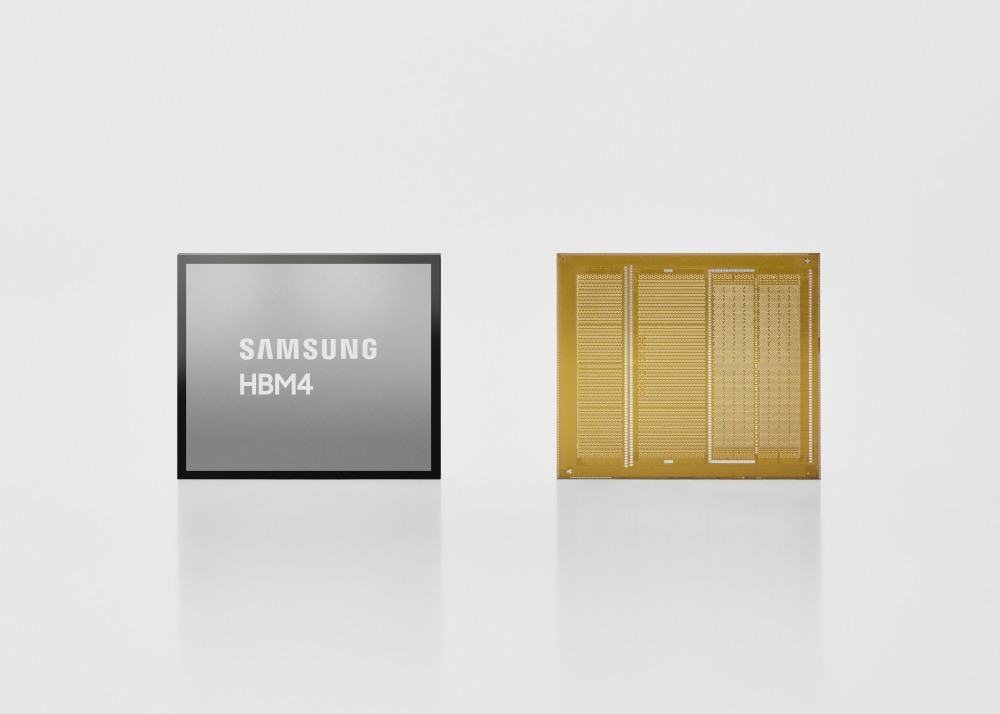

Samsung Electronics has started shipping what it describes as the industry’s first HBM4 memory, targeting AI data centers that require higher bandwidth and energy efficiency.

The AI infrastructure race is increasingly a memory story.

Samsung said it has begun shipping High Bandwidth Memory 4 (HBM4), positioning the company at the forefront of next-generation memory designed to power AI accelerators and large-scale data centers.

HBM plays a critical role in AI workloads, enabling rapid data transfer between processors and memory stacks. As models grow larger and inference workloads intensify, demand for high-performance memory has surged.

Why HBM4 matters

HBM4 is the latest generation of stacked memory architecture, offering higher bandwidth, greater capacity, and better power efficiency than earlier HBM generations.

AI training and inference systems rely on massive parallel processing. Bottlenecks often emerge not from compute limits alone but from memory bandwidth constraints. High-performance memory solutions are therefore becoming strategic assets in the semiconductor value chain.

Samsung’s move comes amid intense competition in advanced memory, where supply constraints have previously driven price volatility and reshaped vendor positioning.

Strategic positioning in the AI cycle

The launch signals Samsung’s intent to reinforce its role as a core supplier in AI infrastructure buildouts. Cloud providers and AI chipmakers are rapidly scaling deployments, requiring sustained volumes of advanced memory.

The broader semiconductor ecosystem is undergoing structural change as AI spending shifts from experimental to production-scale deployments. Memory suppliers that can deliver at volume and with strong manufacturing yields stand to benefit disproportionately.

For AI chip startups and hyperscalers, access to next-generation HBM is becoming a gating factor for performance differentiation.

Industry impact

The memory market has historically been cyclical. However, AI-driven demand is adding a structural component tied to data center expansion and sovereign AI initiatives.

Samsung’s early shipment of HBM4 suggests confidence in manufacturing readiness and demand visibility. It may also intensify competition among advanced memory suppliers.

As AI workloads scale across training, inference, and edge deployments, memory innovation is emerging as a central lever of infrastructure performance.

For investors and industry operators alike, the message is clear: the AI race is not only about GPUs and models. It is increasingly about the silicon layers beneath them.
