Deepgram has made a name for itself as one of the go-to startups for voice recognition. Today, the well-funded company announced the launch of Aura, its new real-time text-to-speech API. Aura combines highly realistic voice models with a low-latency API to allow developers to build real-time, conversational AI agents. Backed by large language models (LLMs), these agents can then stand in for customer service agents in call centers and other customer-facing situations.

As Deepgram co-founder and CEO Scott Stephenson told me, it’s long been possible to get access to great voice models, but those were expensive and took a long time to compute. Meanwhile, low latency models tend to sound robotic. Deepgram’s Aura combines human-like voice models that render extremely fast (typically in well under half a second) and, as Stephenson noted repeatedly, does so at a low price.

“Everybody now is like: ‘hey, we need real-time voice AI bots that can perceive what is being said and that can understand and generate a response — and then they can speak back,’” he said. In his view, it takes a combination of accuracy (which he described as table stakes for a service like this), low latency and acceptable costs to make a product like this worthwhile for businesses, especially when combined with the relatively high cost of accessing LLMs.

Deepgram argues that Aura’s pricing currently beats virtually all its competitors at $0.015 per 1,000 characters. That’s not all that far off Google’s pricing for its WaveNet voices at 0.016 per 1,000 characters and Amazon’s Polly’s Neural voices at the same $0.016 per 1,000 characters, but — granted — it is cheaper. Amazon’s highest tier, though, is significantly more expensive.

“You have to hit a really good price point across all [segments], but then you have to also have amazing latencies, speed — and then amazing accuracy as well. So it’s a really hard thing to hit,” Stephenson said about Deepgram general approach to building its product. “But this is what we focused on from the beginning and this is why we built for four years before we released anything because we were building the underlying infrastructure to make that real.”

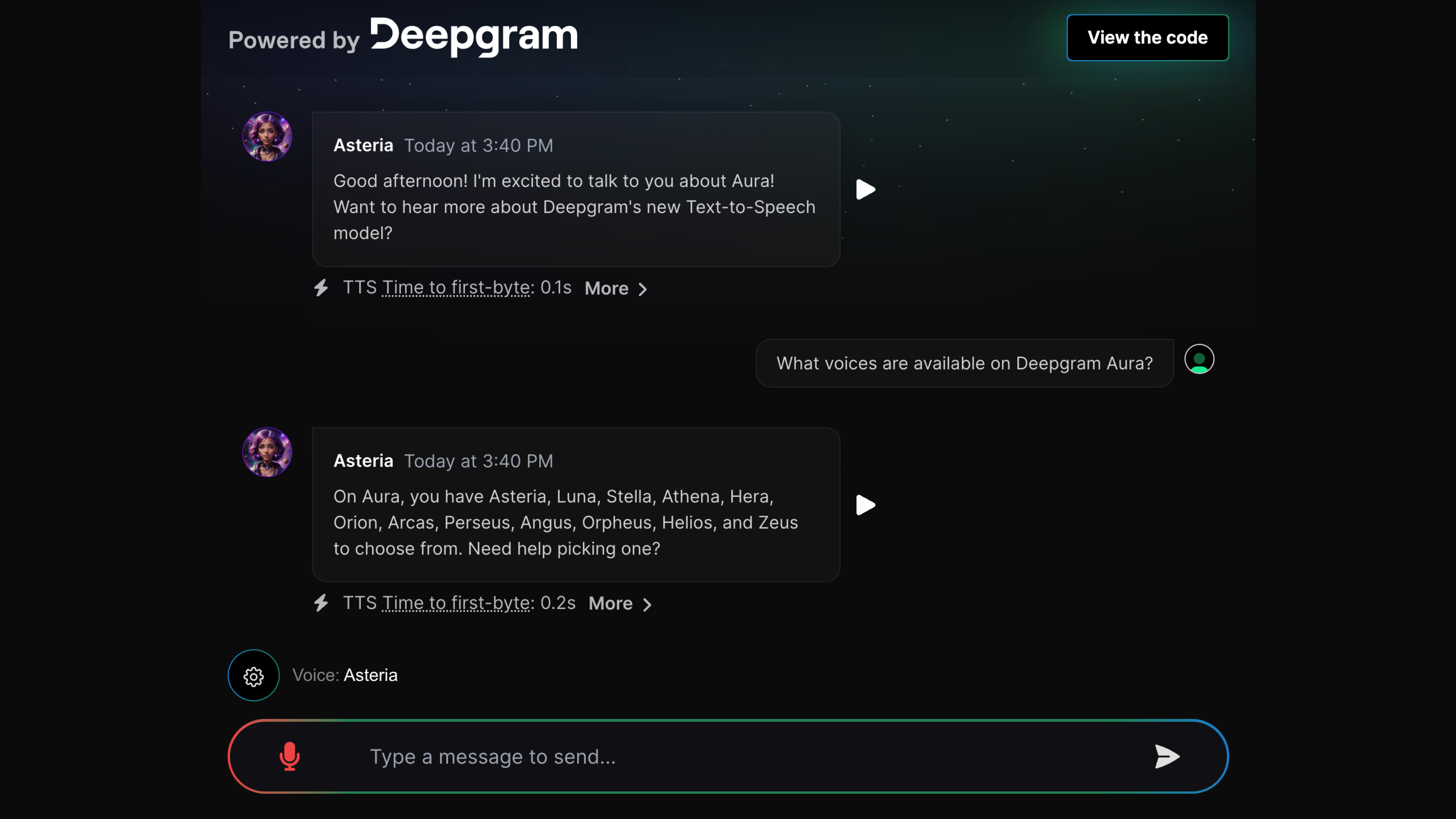

Aura offers around a dozen voice models at this point, all of which were trained by a dataset Deepgram created together with voice actors. The Aura model, just like all of the company’s other models, were trained in-house. Here is what that sounds like:

udio class=”wp-audio-shortcode” id=”audio-2677870-1″ preload=”none” style=”width: 100%;” controls=”controls”>

You can try a demo of Aura here. I’ve been testing it for a bit and even though you’ll sometimes come across some odd pronunciations, the speed is really what stands out, in addition to Deepgram’s existing high-quality speech-to-text model. To highlight the speed at which it generates responses, Deepgram notes the time it took the model to start speaking (generally less than 0.3 seconds) and how long it took the LLM to finish generating its response (which is typically just under a second).

![[CITYPNG.COM]White Google Play PlayStore Logo – 1500×1500](https://startupnews.fyi/wp-content/uploads/2025/08/CITYPNG.COMWhite-Google-Play-PlayStore-Logo-1500x1500-1-630x630.png)