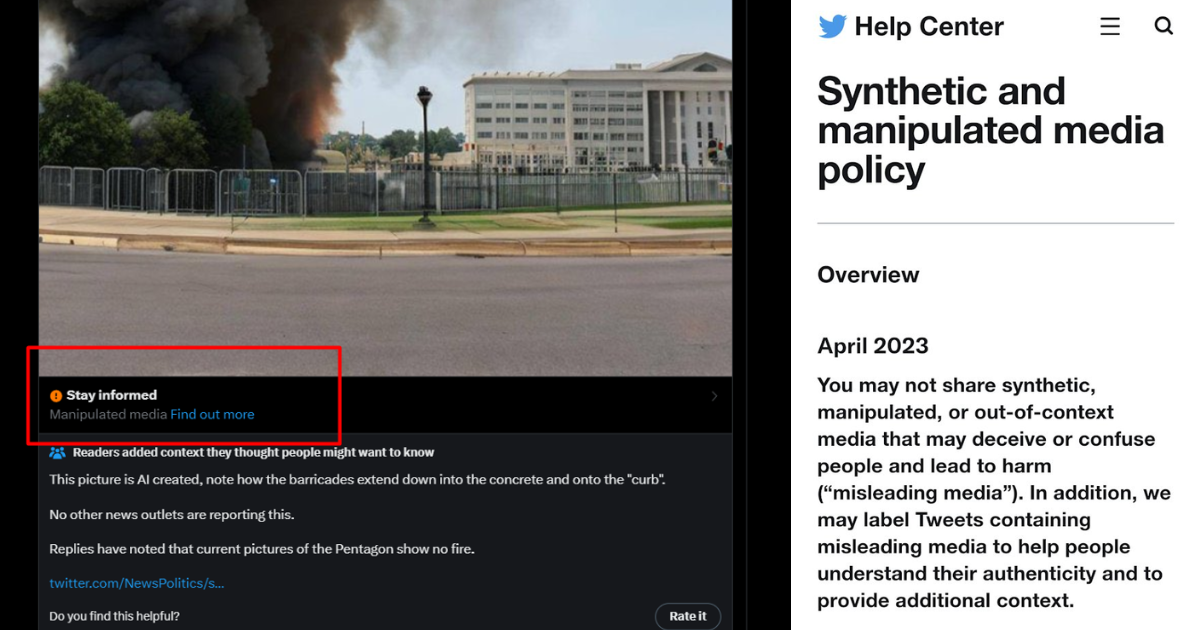

Twitter has taken a significant step in combating the spread of misinformation and manipulated media. The company has introduced a new policy that involves labelling tweets that contain AI-generated pictures and videos, specifically when these visuals have the potential to cause widespread confusion on public issues.

In an era when deepfakes and other manipulated media have become increasingly sophisticated, Twitter’s decision to implement this policy signals a commitment to transparency and to preserving the integrity of public discourse. By clearly labelling AI-generated content, Twitter aims to help users identify potentially misleading or false information and to foster a more informed online environment.

The move comes as part of Twitter’s ongoing efforts to tackle the spread of misinformation and disinformation on its platform. The company has been proactive in implementing policies and features to combat the manipulation of media, including the introduction of labels for manipulated photos and videos.

Twitter’s decision to label AI-generated content reflects the platform’s recognition of the harm such media can cause. By flagging these visuals, Twitter aims to help users make more informed judgments and base their discussions on reliable information.
