At its Advancing AI event, AMD released the Instinct MI300X accelerator, which it says offers industry-leading memory bandwidth for generative AI, and the Instinct MI300A accelerated processing unit (APU), which combines the latest AMD CDNA 3 architecture with Zen 4 CPU cores. Both are aimed at HPC and AI workloads.

Among early customers, Microsoft has announced that it is using the AMD Instinct accelerator portfolio in its recently released Azure ND MI300X v5 virtual machine (VM) series, which is optimised for AI workloads. Meta is also adding MI300X accelerators to its data centres.

OpenAI is adding support for AMD Instinct accelerators to Triton 3.0, which will allow developers to test their models on AMD hardware.

Oracle Cloud Infrastructure (OCI) also plans to add MI300X-based bare metal instances to its high-performance accelerated computing offerings. These instances will support OCI Supercluster with its ultrafast RDMA networking.

Dell also used the event to showcase its PowerEdge XE9680 server, which pairs eight AMD Instinct MI300 series accelerators with AI frameworks powered by AMD ROCm.

Beyond Dell, HPE and Lenovo are also joining the AMD Instinct ecosystem. HPE announced the HPE Cray Supercomputing EX255a, its first supercomputing accelerator blade, which is powered by Instinct MI300A APUs and is expected to be available in early 2024.

Lenovo announced design support for MI300 series accelerators, with availability planned for the first half of 2024. Supermicro also announced its H13 generation of accelerated servers, powered by 4th Gen AMD EPYC CPUs and MI300 series accelerators.

“AMD Instinct MI300 Series accelerators are designed with our most advanced technologies, delivering leadership performance. These accelerators will be utilised in large-scale cloud and enterprise deployments,” said Victor Peng, President of AMD. “By leveraging our leadership hardware, software, and open ecosystem approach, cloud providers, OEMs, and ODMs are bringing to market technologies that empower enterprises to adopt and deploy AI-powered solutions.”

Gigabyte, Ingrasys, Inventec, QCT, and Wistron also announced plans to offer solutions powered by the new AI accelerators.

Specialised cloud providers including Aligned, Arkon Energy, Cirrascale, Crusoe, Denvr Dataworks, and TensorWave also plan to expand access to MI300X GPUs for developers and AI startups.

El Capitan, an exascale supercomputer at Lawrence Livermore National Laboratory, is already hosting an undisclosed number of MI300A APUs. When fully deployed, it is expected to deliver two exaflops of double-precision performance.

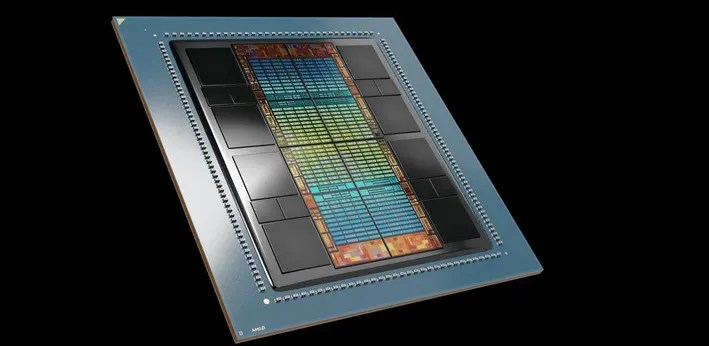

Specifications of MI300X

The AMD Instinct MI300X accelerator is built on the new AMD CDNA 3 architecture. Compared with the previous-generation AMD Instinct MI250X, the MI300X has nearly 40% more compute units, 1.5 times the memory capacity, and 1.7 times the peak theoretical memory bandwidth. It also supports new maths formats such as FP8, designed for AI and HPC workloads.
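The generational ratios above can be checked with simple division. This is a minimal sketch that assumes published spec-sheet figures the article does not quote: 304 compute units, 192 GB and 5.3 TB/s for the MI300X, against 220 compute units, 128 GB and 3.2 TB/s for the MI250X. Treat those inputs as assumptions to verify against AMD's datasheets. The `SPECS` table and `generational_ratios` helper are illustrative names only.

```python
# Assumed spec-sheet inputs (not quoted in the article); verify against AMD datasheets.
SPECS = {
    "MI250X": {"compute_units": 220, "memory_gb": 128, "bandwidth_tbs": 3.2},
    "MI300X": {"compute_units": 304, "memory_gb": 192, "bandwidth_tbs": 5.3},
}


def generational_ratios(new: str, old: str) -> dict[str, float]:
    """Return new/old ratios for every spec field of the two accelerators."""
    return {key: SPECS[new][key] / SPECS[old][key] for key in SPECS[new]}


if __name__ == "__main__":
    for field, ratio in generational_ratios("MI300X", "MI250X").items():
        print(f"{field}: {ratio:.2f}x")
```

With these inputs the ratios come out at roughly 1.38x, 1.50x and 1.66x, consistent with the rounded "nearly 40%", "1.5 times" and "1.7 times" figures.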

The accelerators pair large memory capacity with high compute throughput. With a best-in-class 192 GB of HBM3 memory and 5.3 TB/s of peak memory bandwidth, they are built for the demands of AI workloads.
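One way to read these two figures together is to ask how long a single full pass over HBM takes at peak bandwidth. During LLM token generation, each step typically has to read every model weight at least once, so this time gives a rough lower bound per step for a model that fills memory. The sketch below uses only the article's 192 GB and 5.3 TB/s, and assumes decimal units and ideal peak bandwidth, which real workloads will not reach. The function names are hypothetical.

```python
def full_sweep_ms(capacity_gb: float, bandwidth_tbs: float) -> float:
    """Milliseconds to read `capacity_gb` once at `bandwidth_tbs` (decimal units, ideal peak)."""
    capacity_bytes = capacity_gb * 1e9
    bandwidth_bytes_per_s = bandwidth_tbs * 1e12
    return capacity_bytes / bandwidth_bytes_per_s * 1e3


def max_steps_per_second(bytes_per_step_gb: float, bandwidth_tbs: float) -> float:
    """Upper bound on memory-bound steps per second if each step reads `bytes_per_step_gb`."""
    return 1e3 / full_sweep_ms(bytes_per_step_gb, bandwidth_tbs)


if __name__ == "__main__":
    print(f"Full 192 GB pass at 5.3 TB/s: {full_sweep_ms(192, 5.3):.1f} ms")
    print(f"Upper bound: {max_steps_per_second(192, 5.3):.1f} full passes per second")
```

At these figures a full pass takes about 36.2 ms, capping a purely memory-bound loop at roughly 27.6 passes per second; achieved bandwidth, batching and caching all shift the real number.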

The AMD Instinct Platform, built on an industry-standard OCP design, combines eight MI300X accelerators for a total of 1.5 TB of HBM3 memory. The platform lets OEM partners integrate MI300X accelerators into their existing AI offerings.
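The 1.5 TB total follows directly from eight devices at 192 GB each. This sketch multiplies out both the per-device capacity and the per-device 5.3 TB/s peak bandwidth from the article; the `Accelerator` class and `platform_totals` helper are hypothetical names for illustration. Note that the summed bandwidth is an aggregate of per-device peaks, since each accelerator still has its own local HBM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    memory_gb: float      # HBM3 capacity per device (article: 192 GB)
    bandwidth_tbs: float  # peak HBM bandwidth per device (article: 5.3 TB/s)


def platform_totals(device: Accelerator, count: int) -> tuple[float, float]:
    """Summed capacity (GB) and summed peak bandwidth (TB/s) across `count` devices.

    These are per-device figures added together, not a single shared memory pool.
    """
    return device.memory_gb * count, device.bandwidth_tbs * count


if __name__ == "__main__":
    mi300x = Accelerator("MI300X", memory_gb=192, bandwidth_tbs=5.3)
    total_gb, total_tbs = platform_totals(mi300x, count=8)
    print(f"8 x {mi300x.name}: {total_gb:.0f} GB (~{total_gb / 1000:.1f} TB), "
          f"{total_tbs:.1f} TB/s aggregate")
```

This prints 1536 GB (~1.5 TB) and 42.4 TB/s of aggregate peak bandwidth.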

Compared with the NVIDIA H100 HGX, AMD claims the Instinct Platform delivers up to 1.6x higher throughput when running inference on LLMs such as BLOOM 176B. AMD also says a single MI300X accelerator can run inference on a 70-billion-parameter model such as Llama 2.
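A rough weights-only estimate shows why single-accelerator inference on a large model hinges on memory capacity. Llama 2's largest variant has 70 billion parameters. This sketch assumes 2 bytes per parameter for FP16 and 1 byte for FP8, and ignores the KV cache, activations and runtime overhead, so real deployments need headroom beyond these numbers. Capacities are the article's 192 GB per MI300X and 8 × 192 GB for the eight-accelerator platform; all helper names are hypothetical.

```python
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}  # assumed storage widths; weights only


def weights_gb(params_billion: float, dtype: str) -> float:
    """Approximate weight footprint in GB (decimal); ignores KV cache and overhead."""
    return params_billion * BYTES_PER_PARAM[dtype]


def fits(params_billion: float, dtype: str, capacity_gb: float) -> bool:
    """True if the weights alone fit within `capacity_gb`."""
    return weights_gb(params_billion, dtype) <= capacity_gb


MODELS = {"Llama 2 70B": 70, "BLOOM 176B": 176}
CAPACITIES = {"1x MI300X": 192, "8x MI300X platform": 8 * 192}

if __name__ == "__main__":
    for model, params in MODELS.items():
        for dtype in BYTES_PER_PARAM:
            need = weights_gb(params, dtype)
            where = [name for name, cap in CAPACITIES.items() if need <= cap]
            print(f"{model} ({dtype}): ~{need:.0f} GB of weights; "
                  f"fits on: {', '.join(where) or 'neither'}")
```

Under these assumptions Llama 2 70B needs about 140 GB of FP16 weights, leaving some room on a single 192 GB device, while BLOOM 176B needs about 352 GB in FP16 and so has to be spread across several accelerators.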

Specifications of MI300A

The AMD Instinct MI300A is an accelerated processing unit (APU) designed for data centre applications, which AMD bills as the world’s first APU tailored for HPC and AI workloads.

The MI300A combines high-performance AMD CDNA 3 GPU cores with the latest AMD “Zen 4” x86 CPU cores. With 128 GB of next-generation HBM3 memory, it is claimed to deliver roughly 1.9x the performance-per-watt of its predecessor, the MI250X, on HPC and AI workloads.

Energy efficiency is a priority because HPC and AI workloads are data-intensive and computationally demanding. By integrating CPU and GPU cores in a single package, the MI300A provides a highly efficient platform. AMD ties it to its “30x25” goal of a 30x improvement in energy efficiency for server processors and accelerators used in AI training and HPC between 2020 and 2025.

The post AMD Releases MI300X Accelerator, Competing with NVIDIA H100 appeared first on Analytics India Magazine.

![[CITYPNG.COM]White Google Play PlayStore Logo – 1500×1500](https://startupnews.fyi/wp-content/uploads/2025/08/CITYPNG.COMWhite-Google-Play-PlayStore-Logo-1500x1500-1-630x630.png)