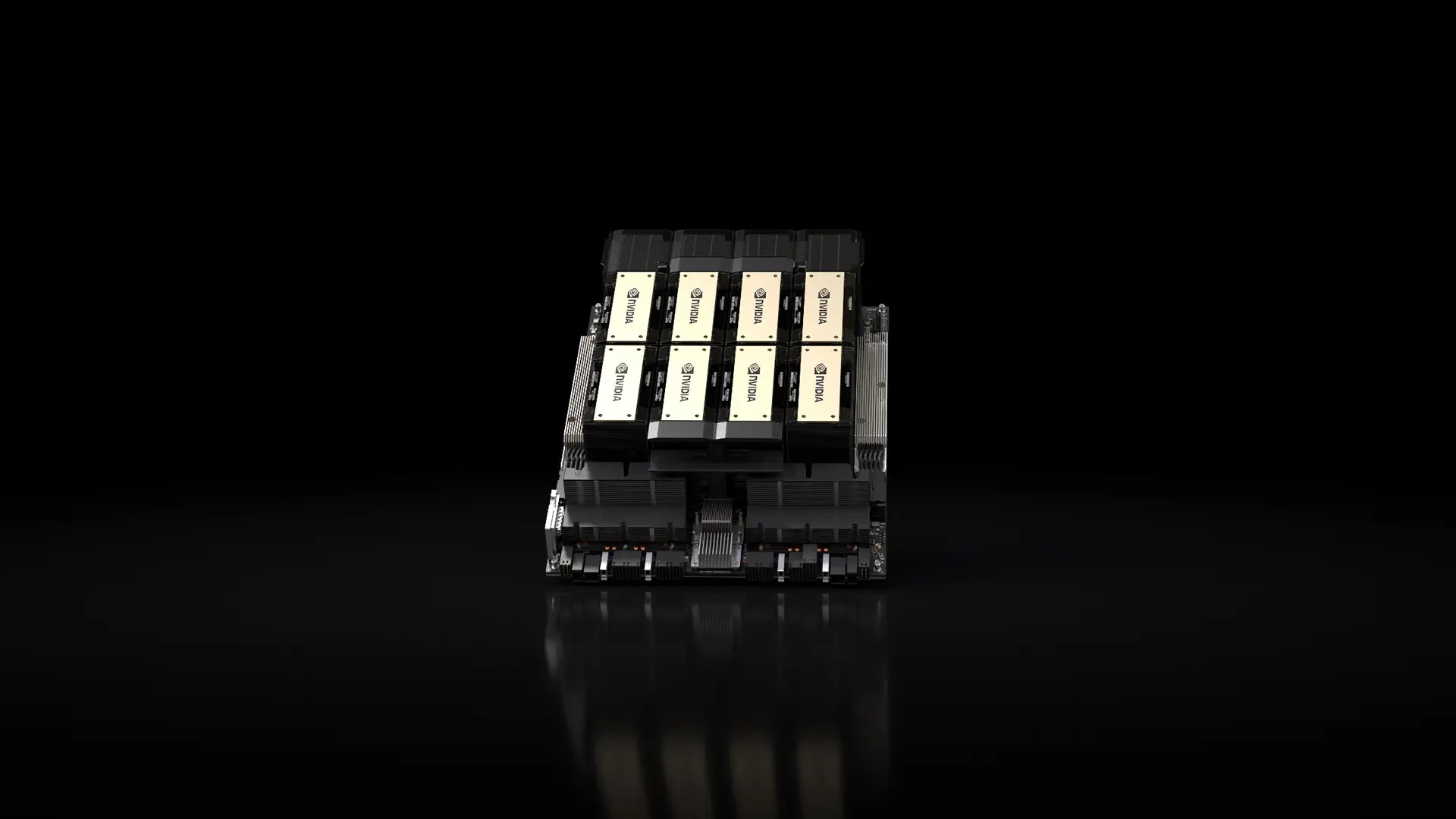

After a successful year in which everyone wanted to get their hands on an H100, NVIDIA is ready with its next-gen GPUs. The company is rolling out the GH200 GPU to select companies that will test and deploy models on it.

Most recently, Bindu Reddy, the CEO of Abacus AI, a company focused on building generative AI applications and agents at scale, announced on X that the company is going to receive GH200 supercomputers today. She said that she would like to focus on open source projects using the AI supercomputers starting in January.

She also said that Abacus AI is probably among the first few companies to receive it.

The NVIDIA GH200, the successor to the H100, is expected to be available by the end of this year, and systems running on it are expected to start by the second quarter of 2024.

In November, AWS announced that it would be the first customer to use the NVIDIA GH200 on its cloud. This came after Google Cloud, Meta, and Microsoft were anticipated to be among the early adopters granted access to the DGX GH200 to investigate its potential for handling generative AI workloads.

Oracle Cloud Infrastructure (OCI) has also announced plans to use the GH200s for its cloud offerings.

NVIDIA also plans to share the DGX GH200 design as a model with cloud service providers and other hyperscalers, allowing them to tailor it to better suit their infrastructure.

On the other hand, Microsoft and Meta have also announced plans to integrate AMD’s recently launched Instinct MI300X accelerators for AI workloads. Meta is going to build its new data centre using the MI300X, which AMD directly compares with the H100.

Moreover, Intel is about to announce its Gaudi3 AI accelerator on December 14th at its ‘AI Everywhere’ conference. Intel has showcased that Gaudi2, the previous version of its accelerator, comes very close to NVIDIA’s H100 and is a cheaper alternative as well.

The post NVIDIA is Rolling Out GH200 for its Customers appeared first on Analytics India Magazine.
