
Companies such as OpenAI, Microsoft and Adobe have launched AI chatbots powered by specific types of large language models (LLMs) that turn text input into images. Google, also in the race, has now gone a step further by releasing VideoPoet, an LLM that can turn text into videos.

To showcase VideoPoet’s capabilities, Google Research has produced a short movie composed of several short clips generated by the model. For the script, Google says it asked Bard to write a series of prompts detailing a short story about a travelling raccoon. It then generated a video clip for each prompt and stitched the resulting clips together into the final YouTube Short.

How the VideoPoet model works

“VideoPoet is a simple modelling method that can convert any autoregressive language model or large language model (LLM) into a high-quality video generator,” Google said.

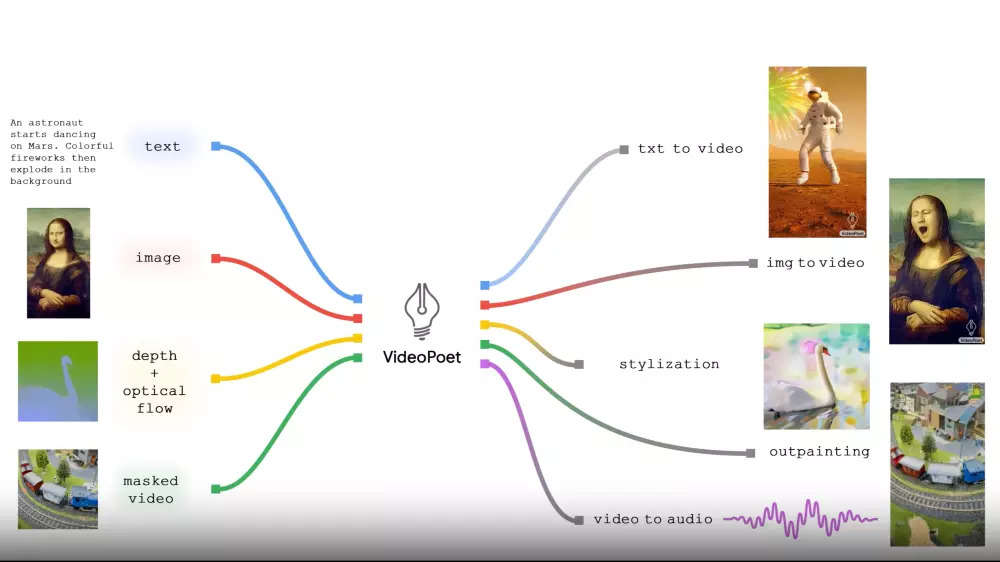

VideoPoet uses a pre-trained MAGVIT V2 video tokenizer and a SoundStream audio tokenizer, which transform images, video and audio clips of variable length into sequences of discrete codes in a unified vocabulary.

These codes are compatible with text-based language models, which makes it straightforward to integrate them with other modalities, such as text. The LLM learns across these modalities to predict the next video or audio token in the sequence.
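The idea of a unified vocabulary can be illustrated with a short Python sketch. It assumes hypothetical vocabulary sizes for each tokenizer (not VideoPoet's actual configuration) and gives each modality a disjoint range of token ids, so text, video and audio codes can sit in a single sequence. A toy bigram counter stands in for the LLM only to show the "predict the next token" step; a real LLM is a large neural network, and none of the function names below come from Google.

```python
# Minimal sketch of a shared ("unified") vocabulary for several tokenizers.
# ASSUMPTION: the vocabulary sizes are hypothetical placeholders, not
# VideoPoet's real configuration; the function names are illustrative only.
from collections import Counter, defaultdict

# Hypothetical number of discrete codes each tokenizer can emit.
VOCAB_SIZES = {"text": 1000, "video": 4096, "audio": 1024}

# Assign each modality a disjoint block of ids in one shared vocabulary.
OFFSETS = {}
TOTAL_VOCAB = 0
for _name, _size in VOCAB_SIZES.items():
    OFFSETS[_name] = TOTAL_VOCAB
    TOTAL_VOCAB += _size  # ends at 1000 + 4096 + 1024 = 6120


def to_unified(modality, local_codes):
    """Shift a tokenizer's local code ids into the shared vocabulary."""
    size = VOCAB_SIZES[modality]
    if any(not 0 <= c < size for c in local_codes):
        raise ValueError(f"{modality} code out of range")
    return [OFFSETS[modality] + c for c in local_codes]


def from_unified(token_id):
    """Recover (modality, local code id) from a shared-vocabulary id."""
    for name, size in VOCAB_SIZES.items():
        start = OFFSETS[name]
        if start <= token_id < start + size:
            return name, token_id - start
    raise ValueError(f"id {token_id} is outside the vocabulary")


def train_bigram(sequences):
    """Toy stand-in for an LLM: count which token follows which."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, prev):
    """Return the most frequent successor of `prev`, or None if unseen."""
    if prev not in counts:
        return None
    return counts[prev].most_common(1)[0][0]


# Example: a text prompt followed by video and audio codes in one sequence.
seq = (to_unified("text", [5, 17])
       + to_unified("video", [0, 42])
       + to_unified("audio", [3]))
print(seq)                      # [5, 17, 1000, 1042, 5099]
print(from_unified(5099))       # ('audio', 3)
model = train_bigram([seq])
print(predict_next(model, 17))  # 1000
```

Offsetting ids is only one simple way to merge vocabularies. The point it illustrates is that once every modality becomes a list of integers from one shared range, a standard language model can consume and predict those integers without modality-specific machinery.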

“A mixture of multimodal generative learning objectives are introduced into the LLM training framework, including text-to-video, text-to-image, image-to-video, video frame continuation, video inpainting and outpainting, video stylisation, and video-to-audio,” the company said, noting that the result is an AI-generated video.
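Training one model on several objectives can be sketched by formatting every example as a task token, followed by conditioning tokens and then target tokens, and by sampling tasks from a weighted mixture. Everything below is an assumption for illustration: the task token ids, mixture weights and loss-mask layout are hypothetical, and the pattern is a common way to train conditional generation in decoder-only models rather than a description of VideoPoet's actual recipe. Only the task names are taken from Google's description.

```python
# Minimal sketch: one model, several training objectives.
# ASSUMPTION: the task token ids, mixture weights and the
# [task token] + condition + target layout are hypothetical; only the task
# names come from Google's description.
import random

# task name -> (hypothetical special token id, hypothetical sampling weight)
TASKS = {
    "text-to-video": (9000, 0.4),
    "image-to-video": (9001, 0.2),
    "video frame continuation": (9002, 0.2),
    "video-to-audio": (9003, 0.2),
}


def build_example(task, condition, target):
    """Lay out one training sequence as [task token] + condition + target.

    The loss mask is 1 only on target positions, so the model is trained to
    generate the target given the task token and the conditioning tokens.
    """
    task_token, _weight = TASKS[task]
    tokens = [task_token] + list(condition) + list(target)
    loss_mask = [0] * (1 + len(condition)) + [1] * len(target)
    return tokens, loss_mask


def sample_task(rng):
    """Draw a task name according to the mixture weights."""
    names = list(TASKS)
    weights = [TASKS[name][1] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


def frame_continuation_example(video_tokens, split):
    """Turn one clip into a continuation example: the first `split`
    tokens are the condition, the rest are the target to predict."""
    return build_example("video frame continuation",
                         video_tokens[:split], video_tokens[split:])


rng = random.Random(0)
print(sample_task(rng) in TASKS)  # True
tokens, mask = frame_continuation_example([1000, 1042, 1042, 1007], split=2)
print(tokens)  # [9002, 1000, 1042, 1042, 1007]
print(mask)    # [0, 0, 0, 1, 1]
```

Because the condition and the target are just token spans, a task such as frame continuation can be built from an unlabelled clip simply by splitting it, which hints at why a single LLM can be trained on many objectives at once.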

In layman’s terms, rather than relying on separately trained components that each specialise in one task, VideoPoet integrates many video-generation capabilities into a single LLM.
