I’m on record as saying that I think Vision Pro is mostly a stepping-stone toward an eventual Apple Glasses product, and that I think those are still years away.

I feel like current glasses tech is giving us partial peeks into that future. Viture One XR glasses provide one potential take, and the Ray-Ban Meta glasses another – perhaps suggesting that the future may be closer than I thought …

Vision Pro is a stepping stone toward Apple Glasses

We can debate the value of Vision Pro as a product in its own right, but to me that’s not the important thing here, as I’ve said before.

> This is a long-term bet by Apple. It scarcely matters how many people want to buy a 2023 device costing several thousand dollars, nor whether a more affordable version launches next year or the year after […]
>
> A Vision Air isn’t even the end-game here. For me, this is really about the eventual development of an Apple Glasses product.

Vision Pro is simply Apple placing a stake in the ground, and expressing its confidence that head-worn devices have a future.

I feel like existing glasses tech is gradually previewing the kinds of experiences we might one day expect from Apple Glasses – and so far, I’m impressed.

Viture Glasses sold me on video consumption

It’s notable how many Vision Pro users are saying that, for them, watching TV shows and movies is the thing they do most often. Joanna Stern said that, once the novelty wore off, this was almost the only thing she still used it for, and I know other owners who’ve found the same.

That doesn’t surprise me. Living in the UK, I haven’t yet had the chance to try Apple’s spatial computer, but I’m 100% sold on face computers (or face monitors) for watching video content.

I’m certainly intrigued about the idea of using glasses as virtual Mac monitors for work, but for me the tech isn’t there yet.

My colleague Filipe Espósito said that his experience of an earlier iteration of Ray-Ban Meta glasses convinced him to believe in smart glasses, and a key reason for this was the convenience of capturing photos and video:

> There are some moments that happen so quickly that you don’t even have time to take your phone out of your pocket. And that’s where the Ray-Ban Meta comes in, because they’re already there on your face, ready to be used. With just the click of a button on the right stem, you can capture a photo of what you’re looking at. Press the button for a few seconds and then it captures a video.

I absolutely echo that. Provided it’s bright enough for me to be wearing sunglasses in the first place, it’s just fantastically convenient to be able to either reach up to press or hold a button, or to say “Hey Meta, take a photo” or “Hey Meta, record a video.”

For video in particular, it’s far less intrusive to automatically capture point-of-view footage without interrupting what I’m doing than it is to hold up my phone. Additionally – and importantly – I don’t end up viewing the scene through a phone screen instead of my own eyes.

For example, this is me looking around exactly as I would have done had I not been recording:

That’s a very different experience to taking my phone out of my pocket and viewing the scene through that. The same was true when I was enjoying listening to this singer, where the microphones coped surprisingly well.

However, it does trigger a Vertical Video Syndrome alert! Both still photos and video have a fixed 3:4 vertical format.

Additionally, it has to be said that the camera quality for both still photos and video doesn’t come anywhere close to that of my iPhone 15 Pro Max; it’s definitely going back a few years. Here are a couple of examples – nothing bad, but also not in current iPhone territory (click/tap to view full-size):

To me, it’s like going back in time to when I used a standalone camera for anything serious, and my iPhone for capturing memories. With this, I feel like the glasses would be my default for memories, while I’d pull out my camera when I care more about the quality.

We also get no visual indication of our framing. The ultra-wide lens means we usually won’t miss anything this way, but it is easy to get a wonky horizon, or less than the ideal framing. Additionally, I rarely shoot ultra-wide with my phone, preferring to control what’s in view and what’s not with a tighter view.

Finally, videos are limited to 60 seconds. That’s fine for quick clips while exploring a city, for example, but I’d absolutely love to be able to use these for things like roller-coaster rides. Increasing the limit to say three minutes would make a huge difference.

Put all these factors together – vertical aspect ratio, middling quality, no visual framing, 1-minute video limit – and it’s definitely not something I’d use for more serious work. But for me the killer app is the ease and convenience of capturing POV video for memories. I will absolutely use them for that.

Photos are initially stored in the on-board 32GB flash memory of the glasses themselves. When you want to transfer them to your phone, you do this using the Meta View app, and the glasses create a Wi-Fi hotspot for the purpose. It’s very quick and painless.

Audio use: Listening, and voice calls

The arms of the glasses contain tiny speakers. I have to say that I don’t feel like any above-the-ear headphones are good enough for listening to music – for that, I’ll stick to my trusty MW09s – but they definitely work well for podcasts and voice calls. If you do find them good enough for music, though, you can link them to Apple Music or Spotify, and the tap to start/resume is handy. The right arm is also a touchpad allowing you to swipe forward and back for volume up/down – though I found this very insensitive.

But for voice calls, they worked really well, with the microphones also allowing the other party to hear me clearly. In summer in particular, I’m rather glad not to have something in my ear.

I was already sold on Shokz bone-conduction headphones for the comfort factor during calls, and these are even more convenient as everything is in a single device I’d be carrying with me anyway. The Meta glasses aren’t bone-conduction, just tiny, downward-facing speakers, but they work well, and are similarly hard for anyone next to you to hear.

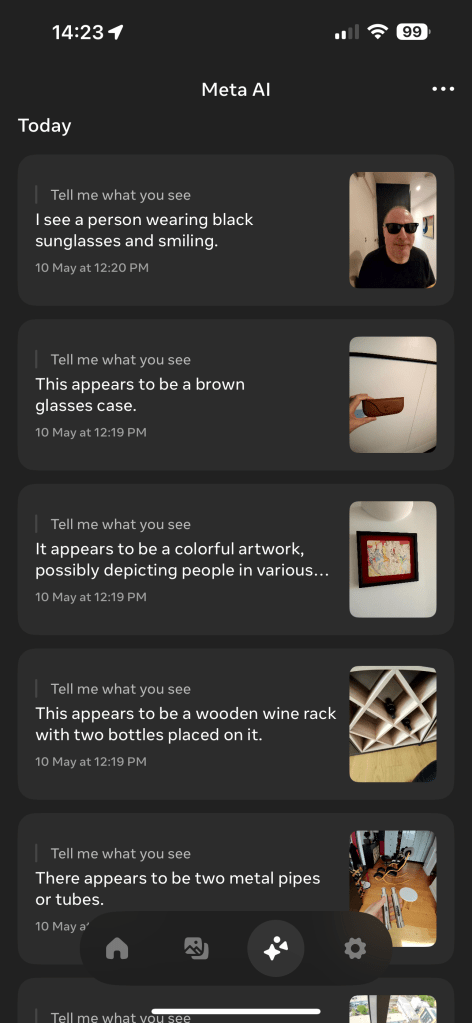

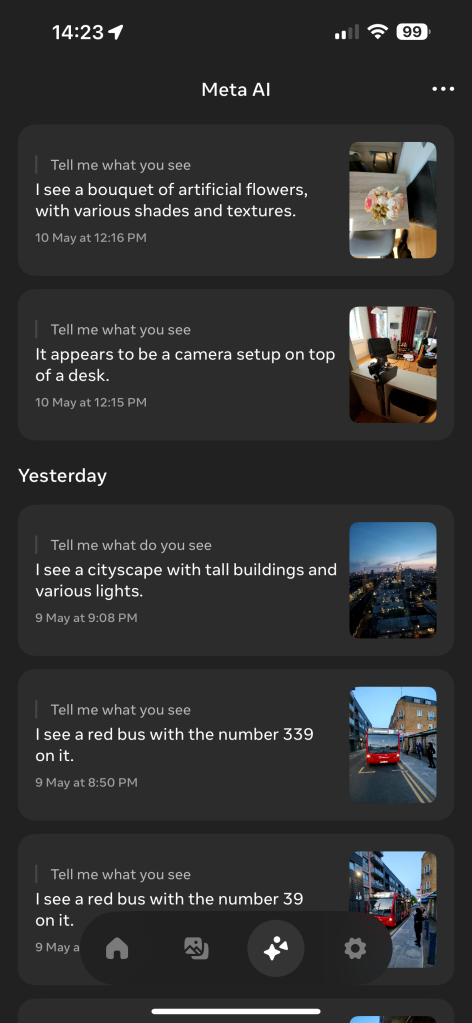

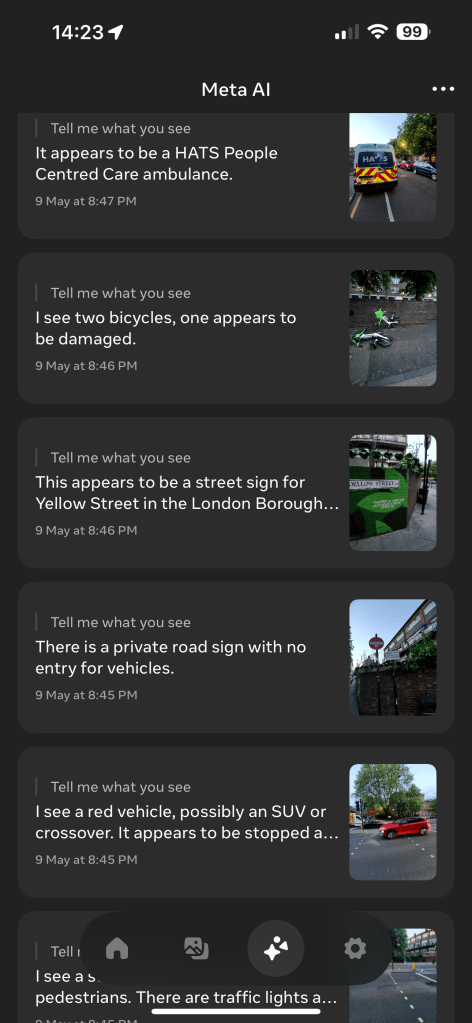

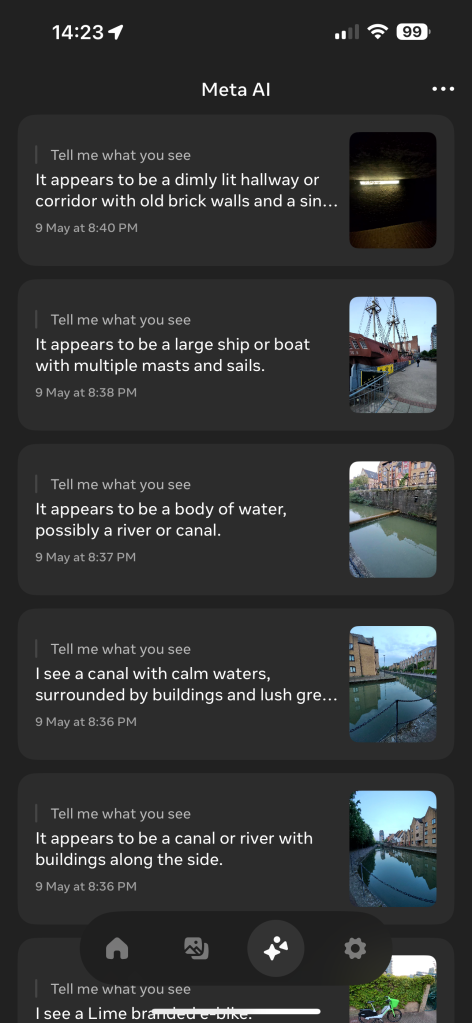

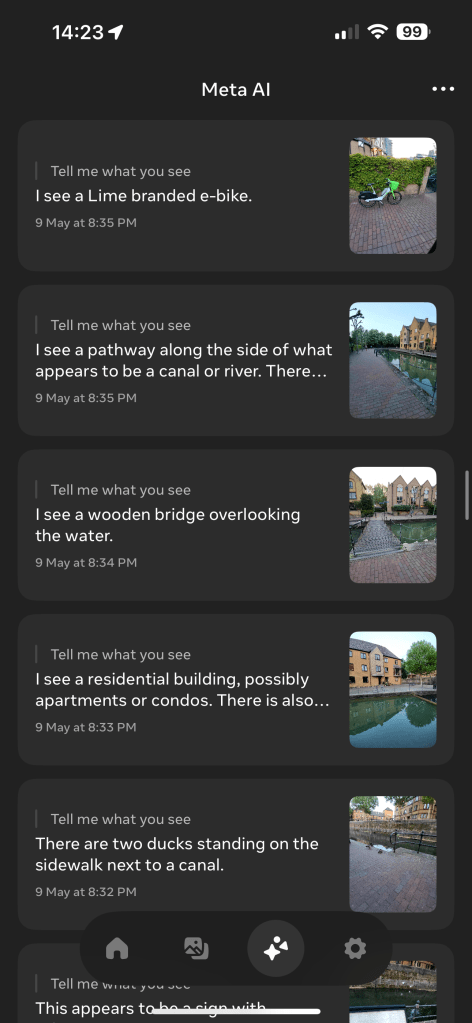

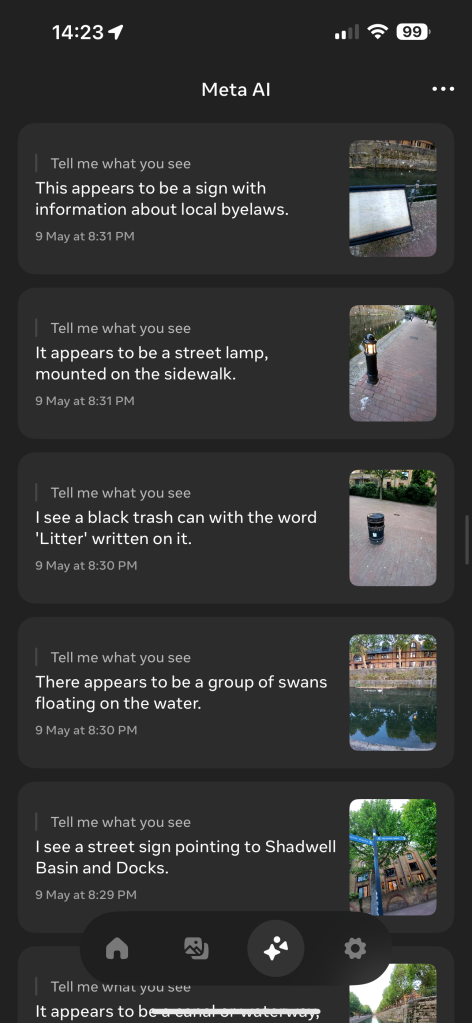

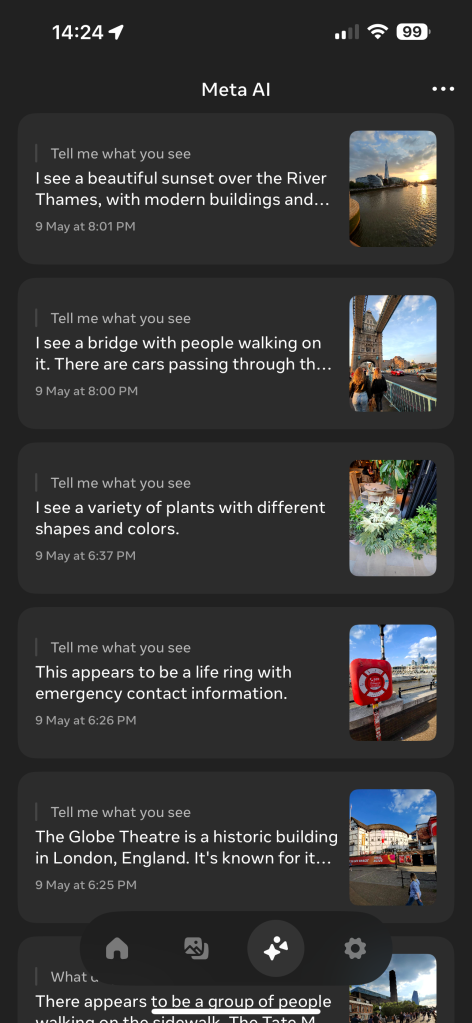

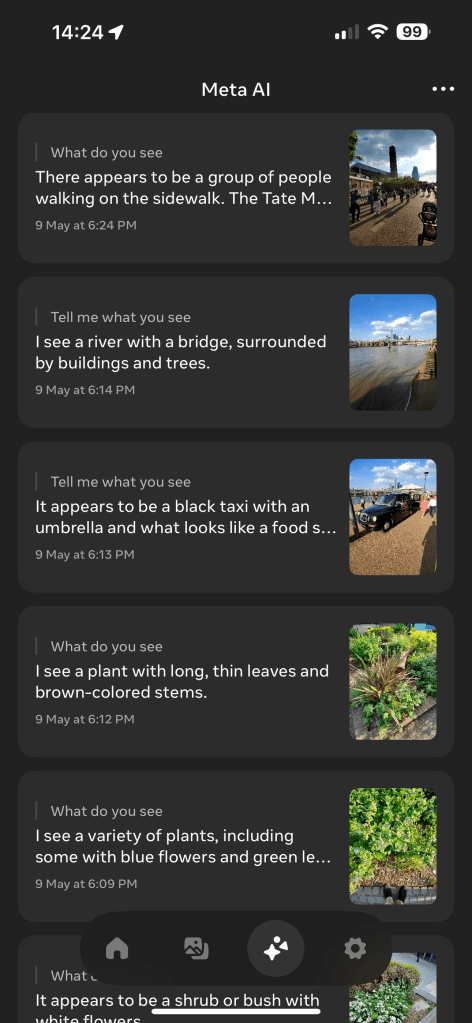

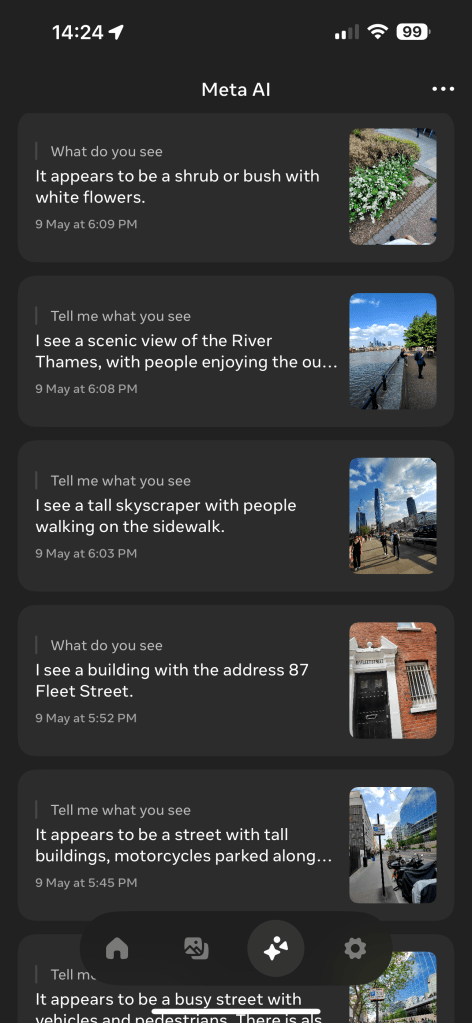

But the really big news since Filipe’s review is that – thanks to a software upgrade – Ray-Ban Meta now offer AI recognition of scenes and objects. This is voice-activated: you simply say “Hey Meta, tell me what you see.” (This feature is currently limited to the US, but that’s very easily solved: force-quit the Meta View app, activate a VPN connection to the US, re-open the app, and wait about 30 seconds until prompted to try the AI feature. Accept, and at that point you can end the VPN connection and keep the capability.)

The phone then reads you the result, and – helpfully – also adds both the photo itself and a text transcript of the result to a log in the app.

Processing isn’t done on-device. Instead, the photo is sent to a Meta server, and the results sent back. But I must say I was incredibly impressed by the speed! It typically took about a couple of seconds on a mobile connection (generally 5G in London, but sometimes with fallback to 4G).

I did fairly extensive testing of this feature over a couple of days, and there’s a mix of good and bad news. Let’s start with the bad.

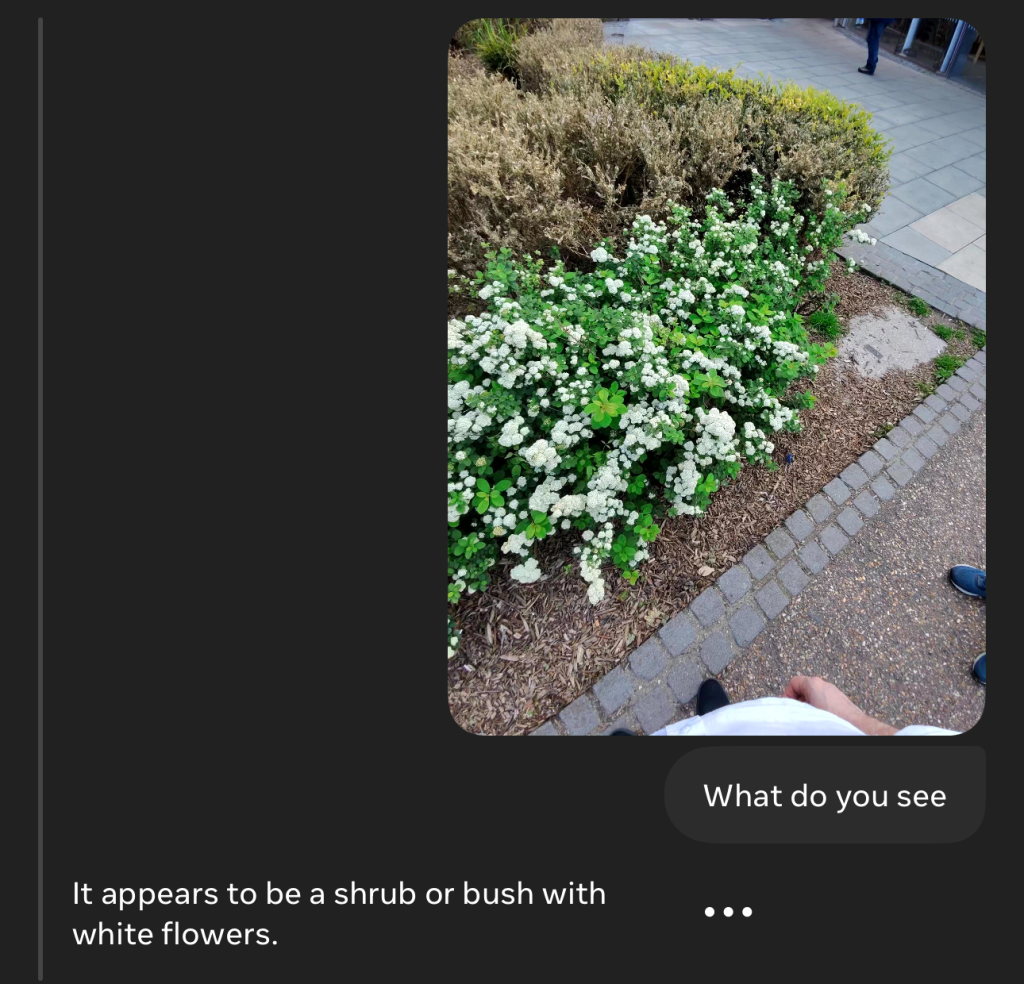

The promise with this type of tech is that we could do things like look at a flower, and have the glasses not only identify it, but also provide information on how best to care for it. Literally every time I tried this with a flower or plant, Meta’s horticultural expertise was identical to mine. Here are a few sample results:

So, uh, yeah – thanks for that.

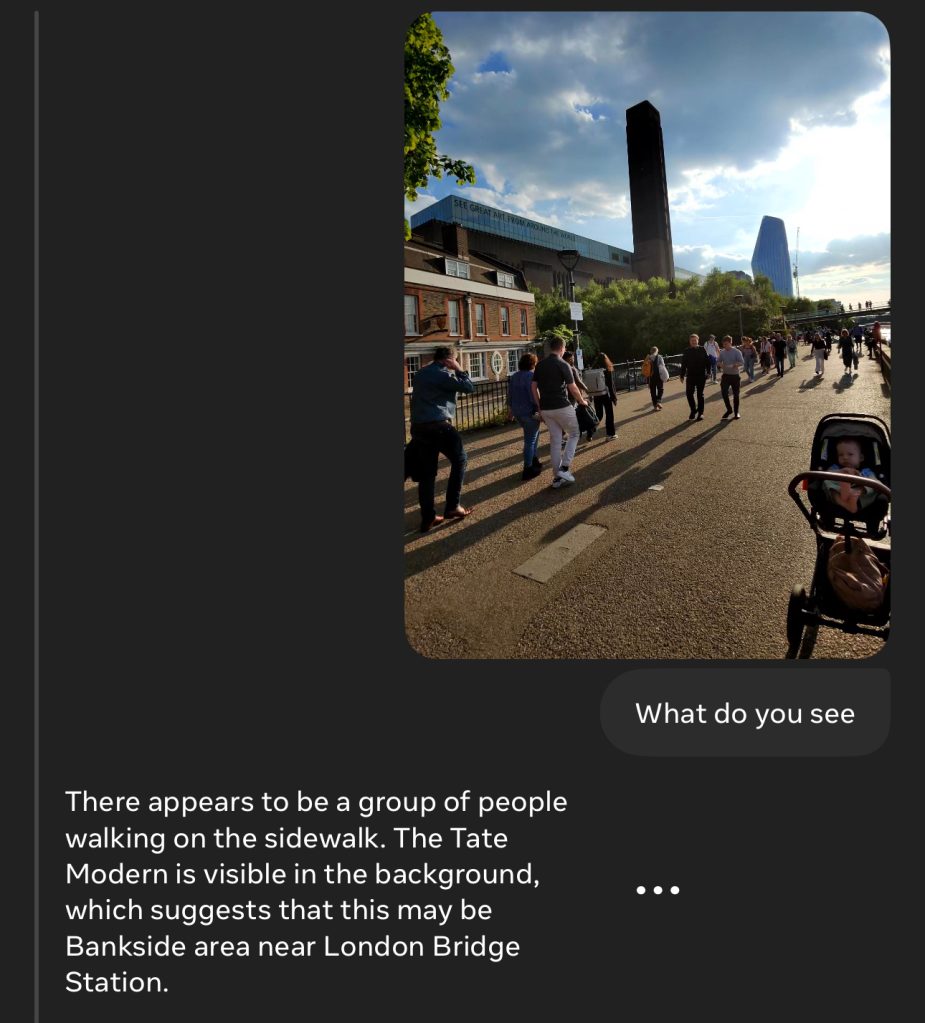

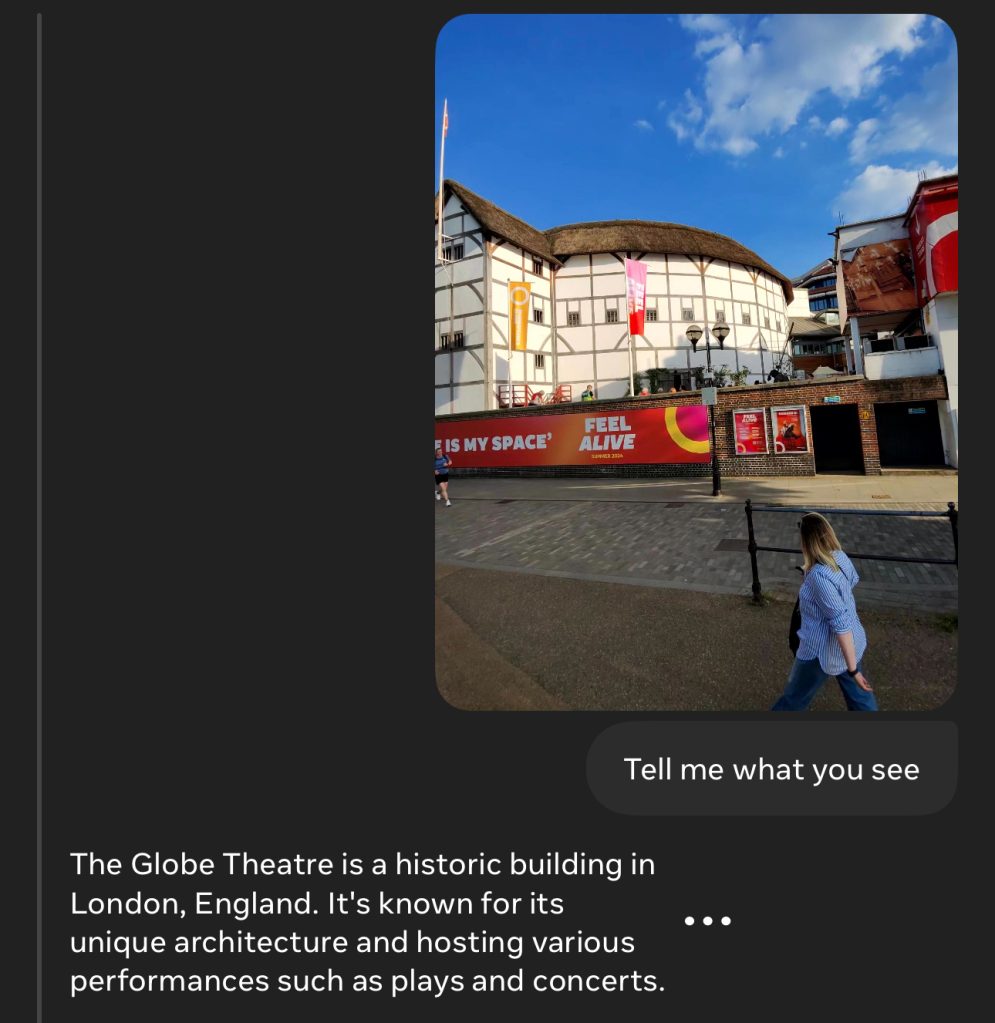

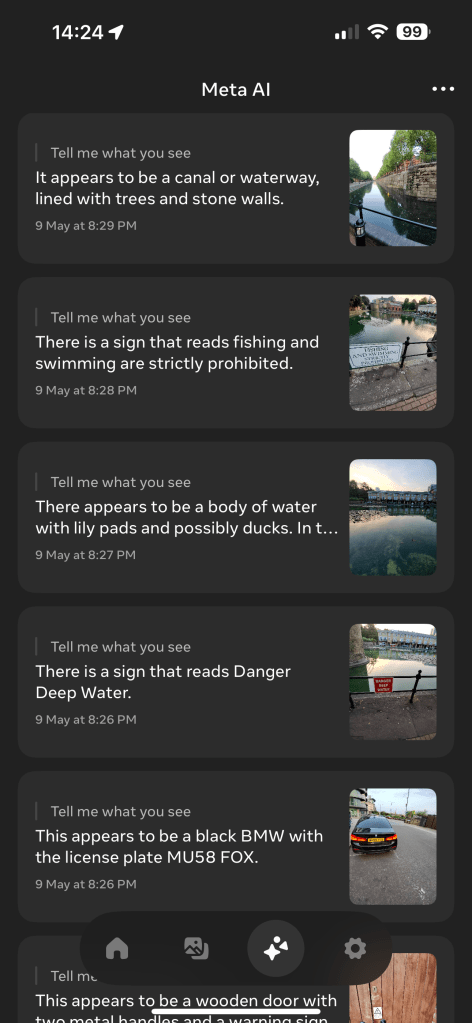

It did slightly better with some iconic London buildings. Meta successfully identified the Tate Modern, and the Globe Theatre.

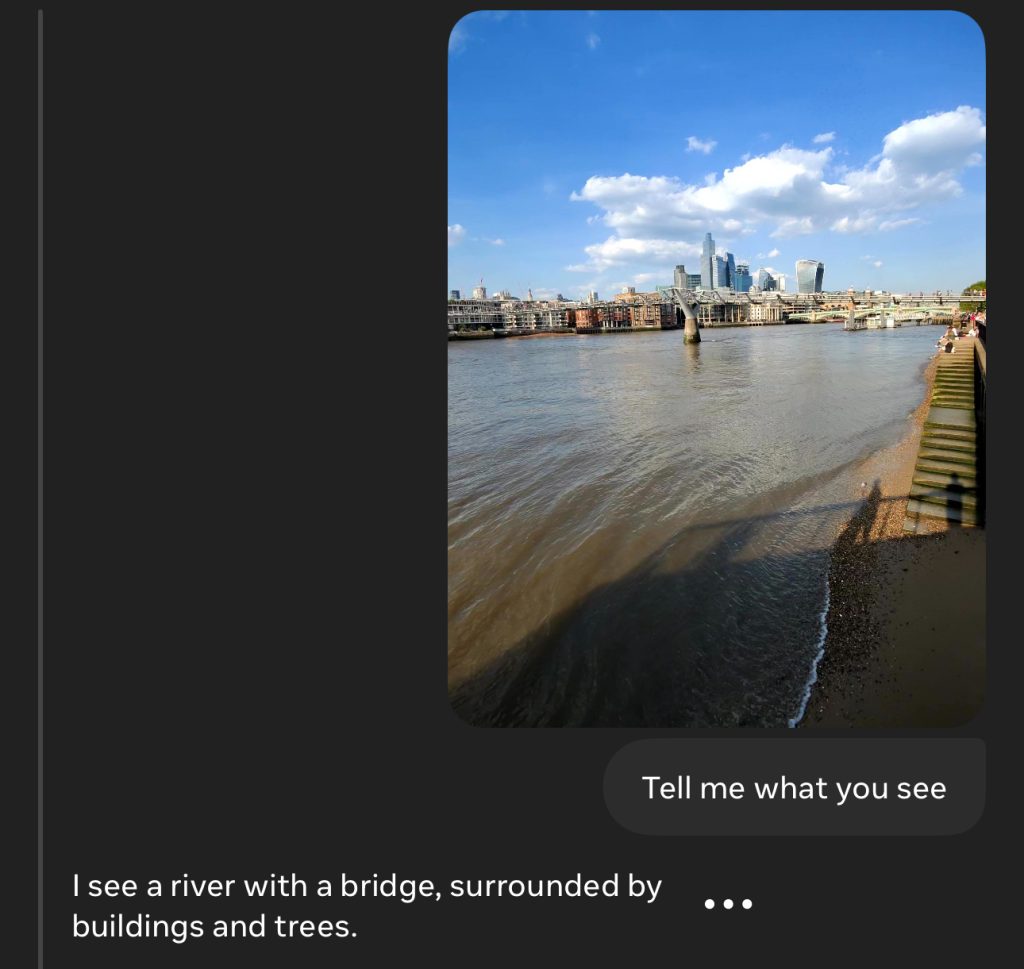

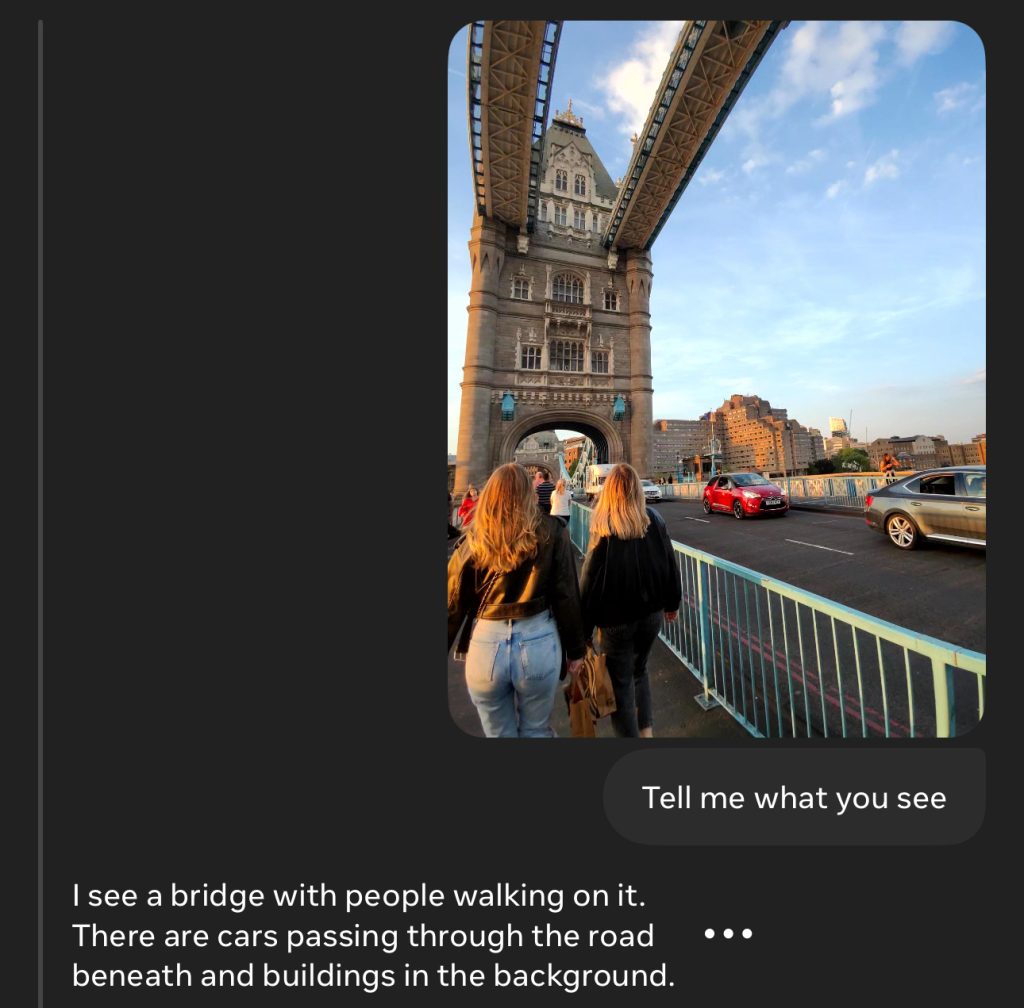

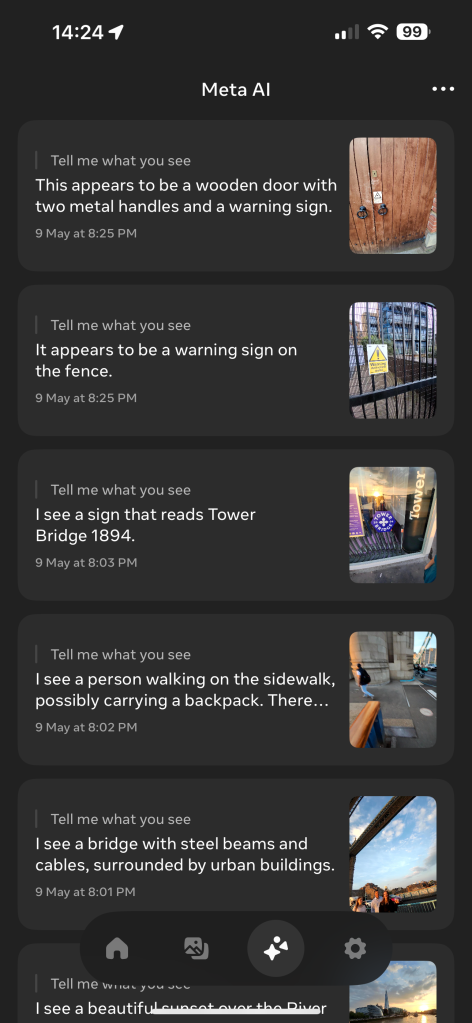

But it failed with Tower Bridge, Millennium Bridge, and others.

Bizarrely, the glasses don’t appear to access the phone’s GPS, which you would have thought would be the logical starting point in identifying a place. For example, this is not only a rather distinctive bridge visually, but I am literally standing on it as I ask Meta to identify it:

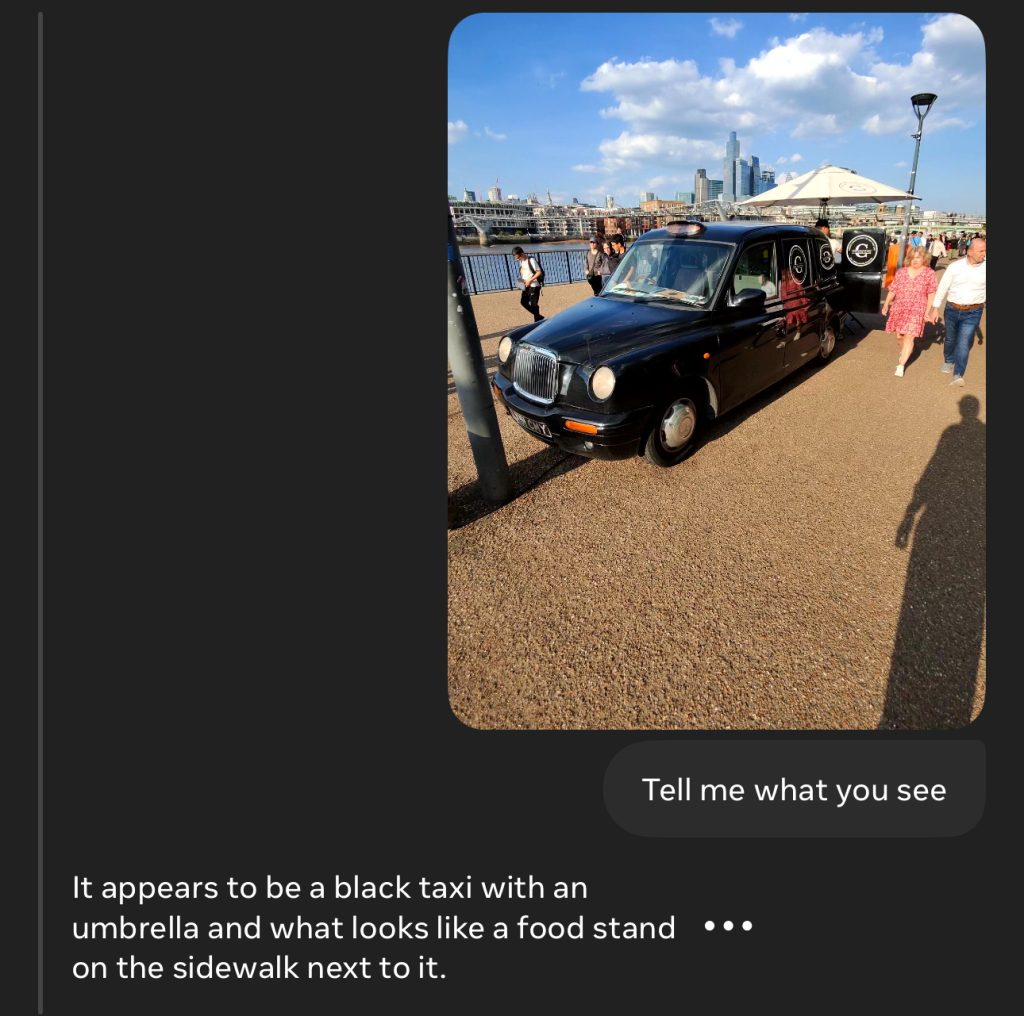

It was sometimes remarkably successful. It didn’t quite figure out that the taxi and the food stand were actually the same thing, but this was still an excellent effort:

Here, it successfully identified the car badge, and correctly transcribed the number plate:

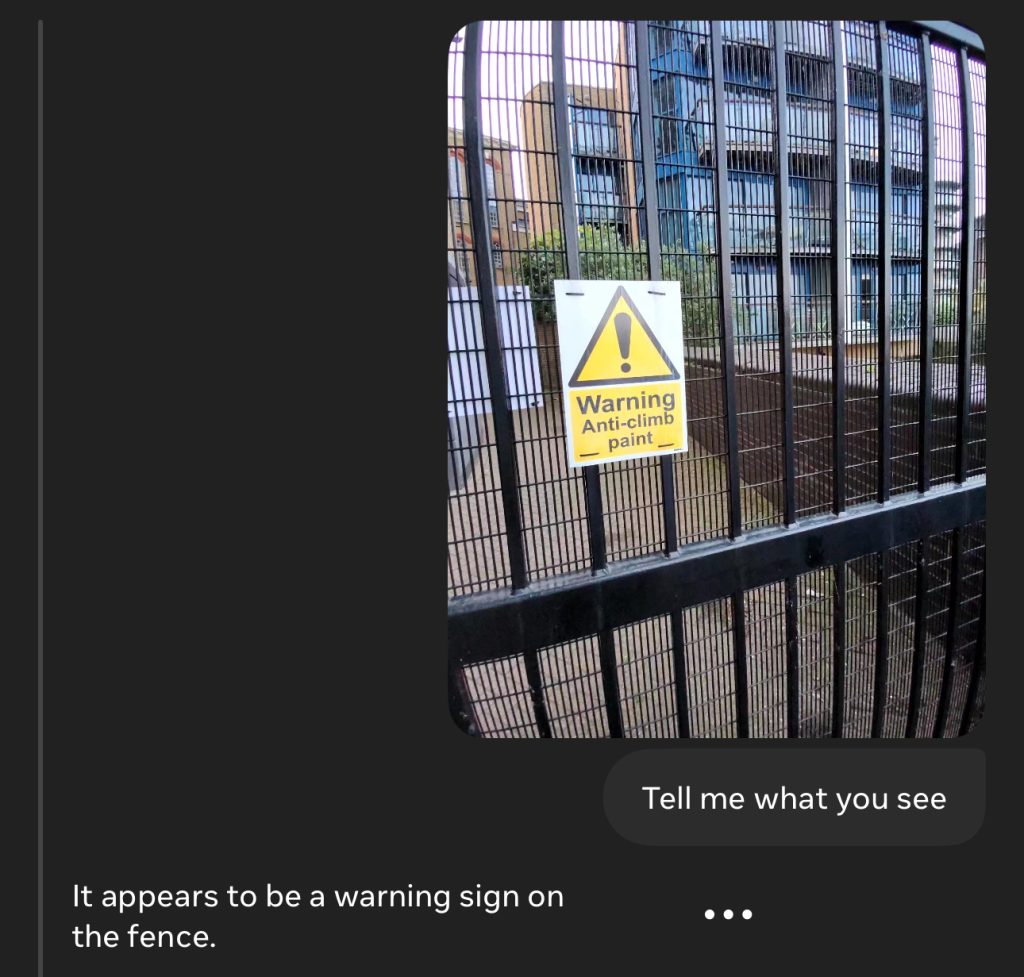

It was hit-and-miss with signs, sometimes reading me the wording, other times not.

In all cases, the very wide-angle camera meant I had to position myself very close to signs. Combine this with the lack of visual framing, and sometimes I got too close.

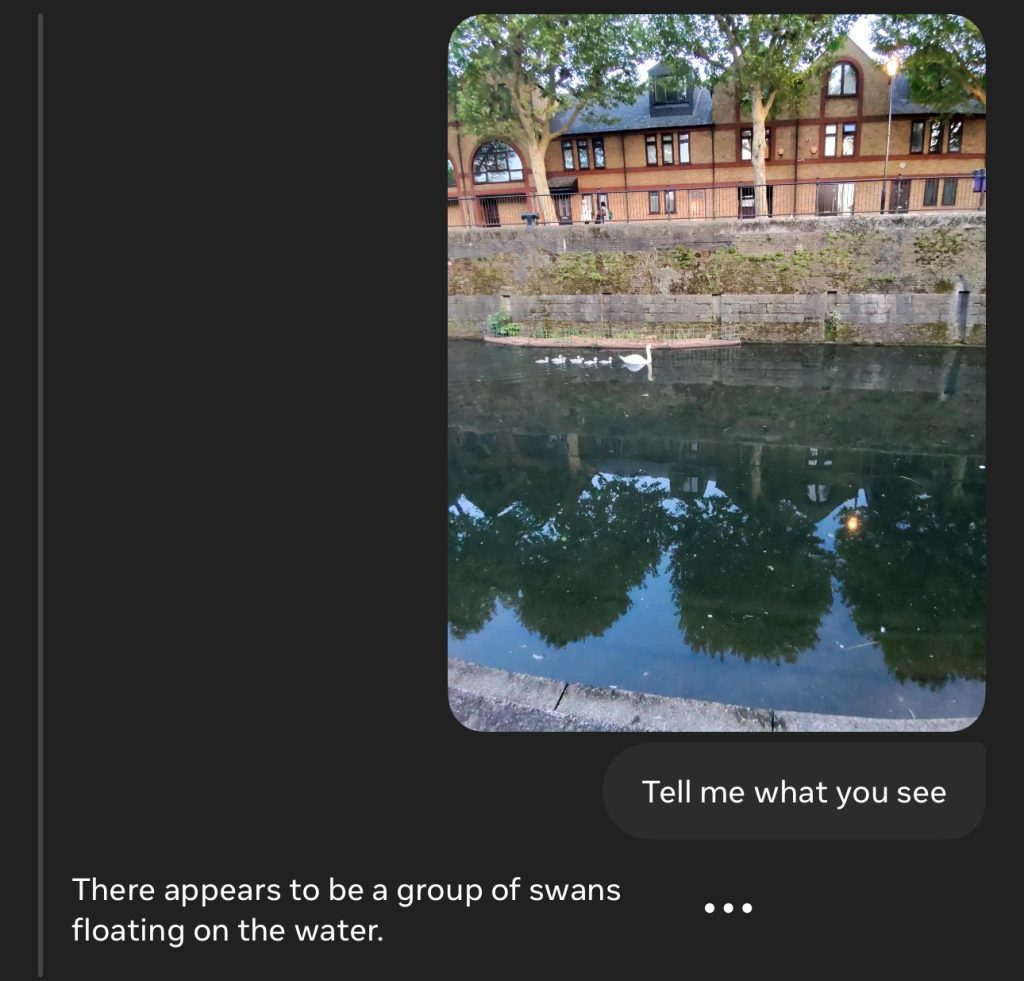

It also did well with ducks and swans.

Click/tap on the frame-grabs in the gallery below to see more examples of where the tech is at currently. Amusingly, the glasses couldn’t identify either themselves, or their charging case!

Why does this excite me?

A non-techy friend asked the quite reasonable question: What’s the point of the AI stuff, other than for visually-impaired people, or people who can’t read?

But this is an early beta. What most excites me is not the fairly generic descriptions it generally gives today, but the potential for the future.

For example, translating signs in foreign languages while travelling, without having to take my phone from my pocket.

Imagine an integration with Citymapper, where it not only tells me I need the number 77 bus, but it also tells me I need to walk to the next bus stop along, and tells me when it spots the bus.

Look at the outside of a restaurant, and it tells me the average Tripadvisor rating, as well as the recommended dishes.

Or, the dream scenario for someone who suffers from mild prosopagnosia, or face blindness (seeing less differentiation between faces, making it hard to recognise people): telling me the name of the person walking toward me, and a sentence or two about where and when we last met!

Ray-Ban Meta glasses are a really handy piece of tech at an impressive price. Standard Ray-Ban Wayfarer sunglasses typically cost around $150-190, and these start at $299. So if you’re a Ray-Ban fan already, you’re paying something like $110 to $150 for the tech. That’s honestly a steal.

Even if you wouldn’t normally buy Ray-Ban, I still think $300 for the convenience of instant POV video capture, without having to take your phone from your pocket, is good value. Add in the fact that you get some headphones thrown in (albeit ones I’d only personally use for voice), and it’s a very convenient package at a very decent price.

The AI features are mostly a gimmick at present. There were just too few examples of it giving me genuinely useful information. But it’s a beta, and I do, as I say, feel excited for the future of this.

The main drawback I found is the battery life. This is claimed to be four hours. I got not much more than half that in one of my tests, when I was really hammering it – using it for AI queries All The Time – so I suspect the claimed figure is about right in real-life use. I’ll update later on this.

It’s decent, but you can’t just leave them switched on the whole day, and the charging case is too bulky to carry in your pocket. As soon as you have to think about switching them on only when you need the tech, you immediately lose that instant photo/video convenience. This is, though, a trade-off. A bigger battery would make the glasses heavier, and potentially bulkier, and the normal sunglasses form-factor is absolutely key to their appeal.

I typically wander round my own city without a bag, but I usually have a shoulder bag or backpack when exploring a city while travelling, so for travel use I’d happily throw in the charging case and recharge them while eating or drinking.

Looking ahead to Apple Glasses

Apple Glasses sure won’t cost $300!

A key difference, of course, is that Ray-Ban Meta have no display – just voice input and speaker output. Apple Glasses will certainly have displays for AR functionality, like displaying notifications and overlaying directions for navigation. I’d say it’s a safe bet that these will also be suitable for watching video, which is one of the key things people enjoy about Vision Pro.

Effectively what I’m expecting is something which combines the camera and voice access features of Meta glasses with the video-watching capability of Viture One glasses. When we can combine all of that into a sunglasses format, we really will have a must-have new product category.

How long that will take is the big question. In particular, creating transparent displays which are unobtrusive when walking around, and yet also effectively block outside light when watching video, is the greatest challenge. Right now, I wouldn’t want to walk around when wearing Viture glasses, even if it can technically be done. I certainly wouldn’t want any sense of my vision being obscured when exploring a city.

But Viture glasses work so well they have become my primary way to watch video when alone, and so far I’m not seeing any reason to grab my usual sunglasses as I leave home when I can instead have all the capabilities of the Meta glasses in pretty much the same form-factor. A few years ago, I wouldn’t have expected either to have reached that level of practical appeal and affordability by this point, so who knows – maybe we’ll get Apple Glasses sooner than I’ve so far expected! I’m certainly excited at the prospect.
