On Wednesday, Meta didn’t announce an obvious killer app alongside the $499 Meta Quest 3 headset — unless you count the bundled Asgard’s Wrath 2 or Xbox Cloud Gaming in VR.

But if you watched the company’s Meta Connect keynote and developer session closely, the company revealed a bunch of intriguing improvements that could help devs build a next-gen portable headset game themselves.

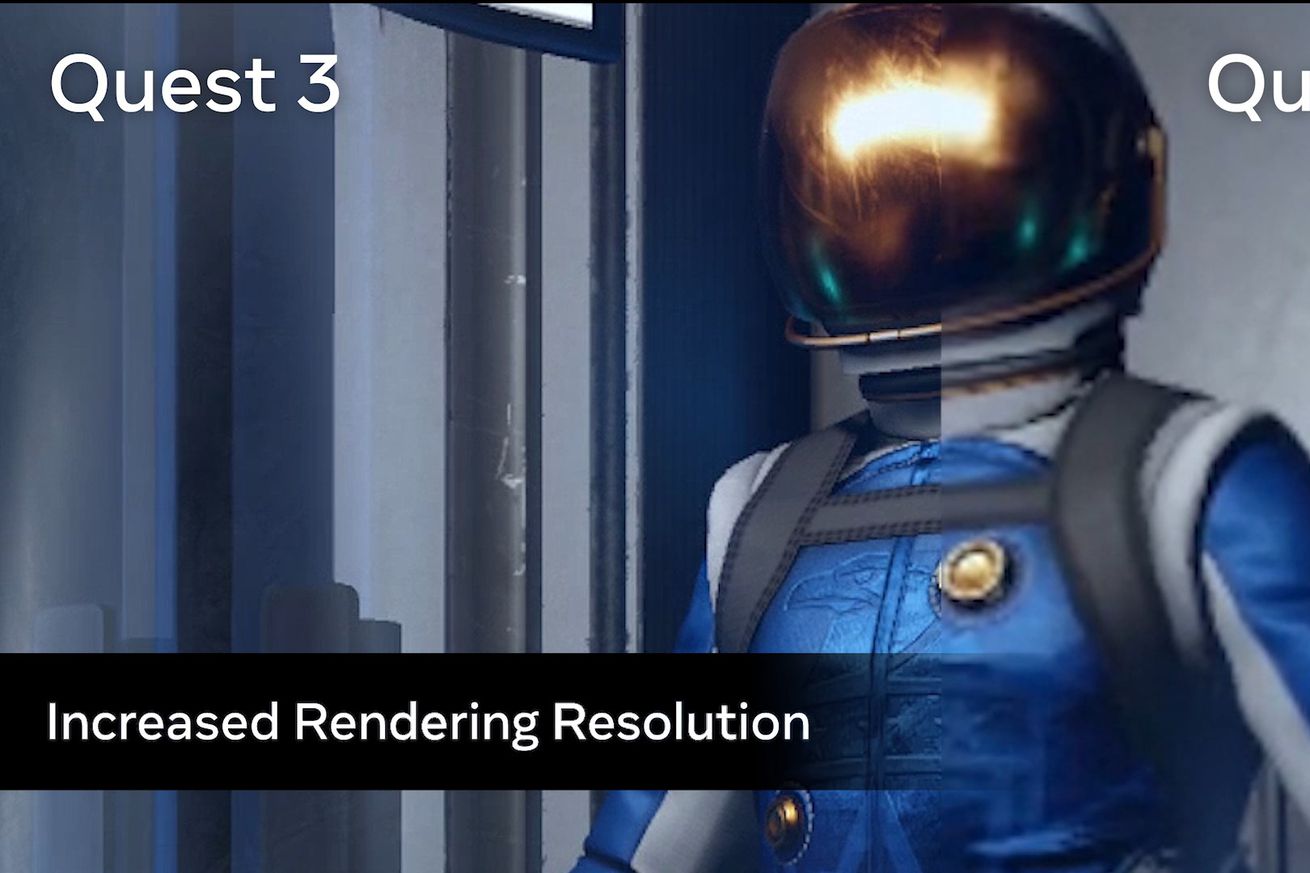

Graphics — look how far we’ve come

This is the obvious one, but it’s still stunning to see just how much better the same games can look on Quest 3 vs. Quest 2. A lot of that’s thanks to the Snapdragon XR2 Gen 2, with double the graphical horsepower and more CPU performance, but the headset also has more RAM, higher resolution per eye, and a wider field of view:

Image: Meta

At the top of this story, check out the increased render resolution, textures, and dynamic shadows of Red Matter 2. Below, find a similar video of The Walking Dead: Saints & Sinners.

I’m not saying either game looks PS5 or PC quality, but they make the Quest 2 versions look like mud! It’s a huge jump. Meta also confirmed that this Asgard’s Wrath 2 video shows Quest 3 graphics.

AI legs

First, virtual Zuck didn’t have legs. Then, he had fake legs. Then, last month, Meta began to walk avatar legs out — in the Quest Home beta, anyhow. Now, Meta says its Movement SDK can give you generative AI legs in theoretically any app or game, creating them using machine learning if developers want to.

Image: Meta; GIF by Sean Hollister / The Verge

I wonder if this tech has… legs.

Technically, the headset and controllers only track your upper body, but Meta uses “machine learning models that are trained on large data sets of people, doing real actions like walking, running, jumping, playing ping-pong, you get it” to figure out where your legs might be. “When the body keeps the center of gravity, legs move like a real body moves,” says Meta’s Rangaprabhu Parthasarathy.

Image: Meta

Give them a hand

Meta has acquired multiple hand-tracking companies over the years, and in 2023, all that M&A and R&D may finally be paying off. In a matter of months, we’ve gone from directly “touching” virtual objects, to faster hand tracking, to a headset whose Qualcomm chip has low-latency, low-power feature detection and tracking baked right in.

“You can now use hands for even the most challenging fitness experiences,” says Parthasarathy, citing a 75 percent improvement in the “perceived latency” of fast hand movements.

Image: Meta

Intriguingly, developers can also build games and apps that let you use your hands and controllers simultaneously — no need to switch off. “You can use a controller in one hand while gesturing with the other or poke buttons with your fingers while holding a controller,” says Parthasarathy, now that Meta supports multimodal input:

Image: Meta

Nor will you necessarily need to make big sweeping gestures with your hands for them to be detected — developers can now program microgestures like “microswipes” and taps that don’t require moving an entire hand. In the example above, at right, the person’s finely adjusting where they want to teleport. That’s something that previously required an analog stick or touchpad to do easily.
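Meta didn’t say how its microgesture detection works under the hood. As a rough illustration of the idea, here’s a minimal Python sketch that classifies a thumb movement along the side of the index finger as a microswipe or a tap, then uses it to nudge a teleport target. The `ThumbSample` type, the thresholds, and the 0.25 m nudge step are all hypothetical; this is not Meta’s SDK API.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical tuning values, not taken from Meta's SDK.
SWIPE_MIN_TRAVEL_CM = 1.0   # thumb must slide at least this far to count as a swipe
TAP_MAX_TRAVEL_CM = 0.3     # a tap barely moves along the finger...
TAP_MAX_DURATION_S = 0.3    # ...and releases quickly


@dataclass
class ThumbSample:
    t: float         # timestamp in seconds
    along_cm: float  # thumb-tip position along the side of the index finger (assumed axis)
    touching: bool   # is the thumb pressed against the index finger?


def classify_microgesture(samples: List[ThumbSample]) -> Optional[str]:
    """Return 'swipe_forward', 'swipe_backward', 'tap', or None for one gesture window."""
    contact = [s for s in samples if s.touching]
    if not contact:
        return None
    # Net slide of the thumb while it was in contact with the finger.
    travel = contact[-1].along_cm - contact[0].along_cm
    if travel >= SWIPE_MIN_TRAVEL_CM:
        return "swipe_forward"
    if travel <= -SWIPE_MIN_TRAVEL_CM:
        return "swipe_backward"
    # A tap: short contact, little travel, and the thumb has lifted off again.
    released = not samples[-1].touching
    duration = samples[-1].t - contact[0].t
    if released and abs(travel) <= TAP_MAX_TRAVEL_CM and duration <= TAP_MAX_DURATION_S:
        return "tap"
    return None


def nudge_teleport(target_m: float, gesture: Optional[str], step_m: float = 0.25) -> float:
    """Move a 1D teleport target forward or back by a small step per microswipe."""
    if gesture == "swipe_forward":
        return target_m + step_m
    if gesture == "swipe_backward":
        return target_m - step_m
    return target_m
```

A real system would derive something like `along_cm` from full 3D hand-joint poses; the point here is only that a small, discrete thumb motion can stand in for an analog stick nudge.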

The mirror universe

These days, lots of headsets attempt to make a digital copy of your surroundings, mapping out your room with a mesh of polygons. The Quest 3 is no exception:

Image: Meta

But its low-latency color passthrough cameras also let you place virtual objects in that mirror world, ones that should just… stay there. “Every time you put on your headset, they’re right where you left them,” says Meta CTO Andrew Bosworth.

Image: Meta, via RoadtoVR

Augments. You can probably still tell which are real and which are digital, but that’s not the point.

He’s talking about Augments, a feature coming to the Quest 3 next year that’ll let developers create life-size artifacts and trophies from your games that could sit on your real-world walls, shelves, and other surfaces.

Pinning objects to real-world coordinates isn’t new for AR devices, but those objects can often drift as you walk around due to imperfect tracking. My colleague Adi Robertson has seen decent pinning from really expensive AR headsets like the Magic Leap 2, so it’ll be pretty cool if Meta has eliminated that drift at $500.
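The bookkeeping behind pinning can be sketched in a few lines. Assuming the headset reports a world pose for a persistent anchor each session (simplified here to a 2D position plus heading, and not Meta’s actual spatial anchor API), an app can store a virtual object’s pose relative to that anchor and recompute its world pose whenever the anchor is found again. Drift happens when the anchor’s estimated pose is off; this sketch only shows the math for keeping an object glued to an anchor, not the tracking itself.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    x: float
    y: float
    yaw: float  # heading in radians

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Apply `other`, expressed in this pose's local frame, on top of this pose."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return Pose2D(self.x + c * other.x - s * other.y,
                      self.y + s * other.x + c * other.y,
                      self.yaw + other.yaw)

    def inverse(self) -> "Pose2D":
        """Pose that undoes this one: rotate by -yaw, then translate by -R^T t."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return Pose2D(-(c * self.x + s * self.y),
                      -(-s * self.x + c * self.y),
                      -self.yaw)


def pin(anchor_world: Pose2D, object_world: Pose2D) -> Pose2D:
    """Store the object's pose relative to the anchor (this is what gets saved)."""
    return anchor_world.inverse().compose(object_world)


def restore(anchor_world: Pose2D, offset: Pose2D) -> Pose2D:
    """Recompute the object's world pose from the anchor's pose in a new session."""
    return anchor_world.compose(offset)
```

Because only the anchor-relative offset is saved, the object follows the anchor even if the session’s world origin changes between headset sessions.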

The company’s also offering two new APIs (one coming soon) that let developers make your real-life room a bit more interactive. The Mesh API lets devs interact with that room mesh, letting — in this example below — plants grow out of the floor.

Image: Meta
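What an app does with the room mesh is up to the developer. As a hypothetical example, here’s a Python sketch that picks out floor triangles (surfaces facing up and sitting near the lowest point of the mesh) as spots where a plant could sprout. It assumes a y-up mesh given as a vertex list plus index triples, wound so each triangle’s cross product points out of the surface; the tilt and height tolerances are made-up values, and this layout isn’t necessarily what Meta’s Mesh API returns.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def floor_triangles(vertices: Sequence[Vec3],
                    triangles: Sequence[Tuple[int, int, int]],
                    max_tilt_deg: float = 10.0,
                    floor_tolerance_m: float = 0.05) -> List[int]:
    """Indices of triangles that face up (y-up) and sit near the mesh's lowest point."""
    floor_y = min(v[1] for v in vertices)
    cos_limit = math.cos(math.radians(max_tilt_deg))
    result = []
    for i, (a, b, c) in enumerate(triangles):
        va, vb, vc = vertices[a], vertices[b], vertices[c]
        n = _cross(_sub(vb, va), _sub(vc, va))
        length = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
        if length == 0.0:
            continue  # skip degenerate (zero-area) triangles
        up = n[1] / length  # cosine of the angle between the normal and +y
        centroid_y = (va[1] + vb[1] + vc[1]) / 3
        if up >= cos_limit and centroid_y - floor_y <= floor_tolerance_m:
            result.append(i)
    return result


def triangle_centroid(vertices: Sequence[Vec3], tri: Tuple[int, int, int]) -> Vec3:
    """A simple spawn point: the centre of the triangle."""
    pts = [vertices[i] for i in tri]
    return tuple(sum(p[k] for p in pts) / 3 for k in range(3))
```

The height check keeps tabletops (also flat and facing up) from being mistaken for floor.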

Meanwhile, the Depth API, coming soon, makes the Quest 3 smart enough to know when a virtual object or character is behind a real-world piece of furniture so they don’t clip through and break the illusion.

Image: Meta

If you look very closely, you can see the current Depth API gets a little hazy around the edges when it’s applying occlusion, and I imagine it might have a harder time with objects that aren’t as clearly defined as this chair, but it could be a big step forward for Meta.
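Occlusion like this generally comes down to a per-pixel depth comparison: draw a virtual pixel only if it’s closer to the viewer than the real surface at that pixel. Meta didn’t detail how the Depth API does it, so the sketch below shows the generic technique, assuming a real-world depth map in meters that’s already aligned with the rendered frame. The optional `softness_m` band fades virtual content in and out near the real surface instead of cutting it off sharply, one common way renderers hide noise in an estimated depth map.

```python
from typing import List, Optional

Grid = List[List[Optional[float]]]


def occlusion_mask(virtual_depth: Grid, real_depth: Grid, softness_m: float = 0.0) -> List[List[float]]:
    """Per-pixel visibility (0.0 to 1.0) of virtual content, given real-world depth.

    None in virtual_depth means no virtual content at that pixel.
    None in real_depth means no depth estimate, so the virtual pixel is left visible.
    """
    mask = []
    for v_row, r_row in zip(virtual_depth, real_depth):
        row = []
        for v, r in zip(v_row, r_row):
            if v is None:
                row.append(0.0)
            elif r is None:
                row.append(1.0)
            elif softness_m <= 0.0:
                # Hard depth test: visible only if in front of the real surface.
                row.append(1.0 if v < r else 0.0)
            else:
                # Linear fade across a band centred on the real surface.
                t = (r - v) / softness_m + 0.5
                row.append(min(1.0, max(0.0, t)))
        mask.append(row)
    return mask
```

With a hard test, a noisy depth map makes edges flicker between visible and hidden; the soft band trades that for a slightly feathered edge.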

Unity integration for less friction

To help roll out some of the Quest 3’s interactions, Meta now has drag-and-drop “building blocks” for Unity to pull features like passthrough or hand tracking right into the game engine.

Image: Meta


Image: Meta


Image: Meta

The Meta XR Simulator.

The company’s also launching an app to preview what passthrough games and apps will look like across Quest headsets. It’s called the Meta XR Simulator.

Disclaimer

We strive to uphold the highest ethical standards in all of our reporting and coverage. We at StartupNews.fyi want to be transparent with our readers about any potential conflicts of interest that may arise in our work. It’s possible that some of the investors we feature may have connections to other businesses, including competitors or companies we write about. However, we want to assure our readers that this will not have any impact on the integrity or impartiality of our reporting. We are committed to delivering accurate, unbiased news and information to our audience, and we will continue to uphold our ethics and principles in all of our work. Thank you for your trust and support.
